Testing Approach

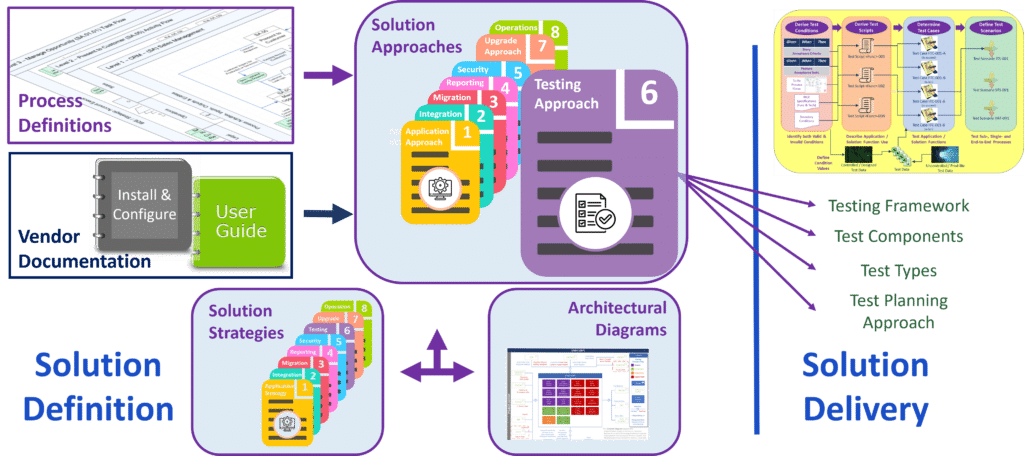

The sixth of eight (6 of 8) artifacts in the Solution Approach series is the Testing Approach. Basically, this Approach connects the higher-level guidance of the Testing Strategy with corresponding Solution Delivery content.

Additionally, it offers guidance for the design and development of individual Test Plans. Each Plan describes an individual test cycle associated with the Solution.

To begin, use a copy of the upper portion of this page as the basis, or template, for this Approach. Thereafter, follow the 'How to Develop the Testing Approach' steps in the lower portion to complete this table-driven (i.e., fill in the blanks) artifact for a specific Solution.

Begin Template

Solution Approach 6 of 8 - Testing

The series of artifacts outlining an Approach for this Solution continues with Testing. Basically, each Approach bridges a gap between the guidelines and guardrails found in its corresponding, higher-level Testing Strategy, and the subsequent execution of iterative Solution Delivery.

By working through this Approach, an initiative defines the testing sufficient to validate Solution change. Unlike most other Approach artifacts, this one does not add Features to the Solution Backlog. Rather, it helps define where, when, and how to work items added to the backlog. For the most part, that involves making and testing various types of Solution change.

This Approach builds upon that which appears in the corresponding Application Approach. Refer to that document for additional information.

Introduction

Individual Solutions each have different scope, different applications, different technologies, and different means by which to effect changes. For most, permissible changes can occur through configuration, operation, and various abilities to customize and/or extend Solution functionality. Accordingly, each has differing testing needs as well.

Having covered the Test Components and Test Types applicable to this Solution in the Testing Strategy, this Approach describes how to conduct testing of the Solution and changes to it. In short, it defines test development and execution as they apply to this Solution.

The contents of this Approach allow all stakeholders to better understand the work related to any Solution change, from basic configuration to complex customization. To be sure, every implementation requires testing. Even those which plan to be 'vanilla', or to not allow customizations, still require a great deal of testing. In fact, expect testing to take up the most time and implementation effort.

Objective

The objective of this document is to explain aspects of testing as they apply to this Solution. It explains how to apply overall concepts described in the Testing Strategy to best suit the needs of the Solution. It also provides specific orientation on the design, build, and use of test materials which anyone involved in testing should follow.

In general, this Approach bridges the gap between the overall Testing Strategy and individual Test Plans. Test Plans describe items specific and unique to each Solution test cycle. As a result, individual Test Plans need only reference this Approach rather than including relevant content in each plan. In other words, this Testing Approach covers most of the topics that should be consistent throughout all test cycles, and hence redundant if left to the individual Test Plans.

Audience

The audience for the Approach includes Solution Managers, Architects, Business / Process Owners and Team(s) who will design, build, and approve the actual Tests. In general, this Testing Approach enables stakeholders to make initial estimates of test-related work. As a result, they are better positioned to manage expectations and organize delivery Teams. For instance, a Solution with a lot of change might benefit from having more than one Team member dedicated to Test design and development. Likewise, a Solution planning to use Candidate Releases will benefit from using a Quality Assurance group, separate from individual Teams. Regardless of the work division, anyone planning, designing, and developing Tests will find this Approach offers them a valuable head start.

Sections Overview

The sections in this Approach describe Test Development & Execution. Basically, they define 'when' to use various Test Types, 'where' to use each type, and 'how' to build various Tests.

To succeed with an iterative approach, participants must produce more Test Components than the changes they seek to validate. Accordingly, estimates of Story and Feature size should include test-related efforts. That is, estimates should not just consider the changes. Rather, they must include both the changes and the work to validate the changes.

Moreover, unlike making each change, which typically occurs once, validation may occur many times over. In short, testing comprises a larger portion of Solution Delivery than the changes it validates.

The Approach seeks to structure Testing efforts to:

- Facilitate successful testing of any Business Processes or Technology Enablers which the Solution supports.

- Segment delivery work and related test activities to allow for improved management of scope and tasks, as well as clearer reporting and communication of work in progress.

- Ensure that new Solution Modules – both delivered and customized – work together to provide the functionality required by the Customer.

- Provide an educational opportunity to select End-Users of the new Solution by providing them with well scripted "test drives".

- Ensure successful completion of testing on time to meet planned Release dates and ensure smooth Rollout(s) to the Customer.

- Guide the design and development of test materials to:

  - Facilitate reuse.

  - Reduce the time and effort required to plan, build and execute tests; and

  - Improve the quality of test coverage and reporting.

- Encourage use of TMS features to better track and report test progress and to simplify defect management.

- Formalize testing principles, assumptions, roles, and responsibilities.

Section 1 - Testing Stages

Like the overall Solution itself, Testing has a lifecycle. In short, every Test progresses through six (6) Stages. Similarly, because they relate to iterative Solution Delivery, each stage occurs over and over. Every stage involves a certain amount of effort, and these efforts should factor into the planning, estimating, and delivery of every Feature and Story.

Many descriptions of a Software Testing Life Cycle (STLC) place Testing ownership in a QA group. However, in an iterative model like the ITM, ownership must apply both to Teams, which are responsible for some testing, and to any QA group, which may be responsible for other testing - if the Solution involves a QA group.

To clarify, there are not two separate Approaches, one for Teams and another for QA. Rather, there is a single, holistic Approach for which Teams and QA share responsibility. To phrase it differently, if an implementation does not involve a QA group, then Team(s) themselves must pick up any testing for which a QA group would be responsible.

The 6 stages provide context to the overall Testing effort. Moreover, they relate to the subsequent topics in this Approach. To be clear, all stages apply to both Teams and QA, if involved.

Stage 1: Requirements Analysis

In most cases, requirements identification begins even before a Solution's initial definition. Indeed, relevant requirements may originate from:

- Requests for Change.

- Business Process Definitions, or as summarized in the Process Requirements Matrix.

- Solution Approach documents.

- Epic Definitions; and

- Analysis & Design workshops, meetings, and interviews.

Regardless of their source, the objective is to capture and place all requirements in one of two proper locations: Features or Stories.

In both cases, Acceptance Criteria (AC) are the mechanism to capture and define requirements. Indeed, finalizing the relevant AC is the last step in making any Feature "Ready" for a Release Planning Event. Similarly, it is the final step in making any Story "Ready" for a Sprint-Planning Event.

Stage 2: Test Cycle Planning

Test Planning is about figuring out how to efficiently and effectively validate the host of changes anticipated during a pending Release and its Sprints. Test Plans inform relevant stakeholders of how testing will commence and progress. Ideally, each plan should clearly demonstrate how test results will build stakeholder trust in the corresponding Solution Components.

The corresponding Testing Strategy defined Test Components and Test Types relevant to the Solution. Below, this Approach defines which Test Types to associate with Sprints, and which to associate with Releases. For each Sprint or Release increment, each relevant Test Type receives its own Test Plan. As with Test Types, Test Plans also build upon one another. That is, validations conducted in a prior plan become a foundation for later plans. As a result, there is little need to execute the same Tests in multiple plans. The start and end of each Sprint and Release iteration mark the start and end of each corresponding Test Cycle. To clarify, each Test Cycle includes Test Plans for each Test Type to execute within the given iteration.

When preparing Test Plans, testers should merely reference the Testing Strategy and Testing Approach rather than repeat content that would otherwise need to appear in such plans.

Stage 3: Test Design & Development

Per Test Plans, Team(s) and/or QA members (as appropriate) begin to assemble the Test Components each plan calls for. Basically, this involves adding and/or updating:

- Test Scripts

- Test Cases

- Test Scenarios; and

- Test Datasets.

Of course, this is the time to seek reuse opportunities. For example, extend an existing script for new functionality rather than build one from scratch. Likewise, add values to an existing dataset to address new requirements rather than recreating what already exists.

This is also the time to identify and create / update Automated Tests. Design and automate reusable Tests that may repeat frequently over the life of the Solution, such as those for any function undergoing Regression Testing. Most automated tests are built from initial manual tests. The sooner automation of such tests can occur, the sooner resources free up for additional, deeper testing.

Stage 4: Test Environment Setup

Most Solutions make use of multiple Environments, or instances, over their lifecycle. Indeed, many use separate Environments for development, testing, and production. However, even more Environments can often improve the pace and quality of delivery. For instance, they may allow more tasks to occur in parallel, rather than sequentially, meaning more work occurs in less time. Similarly, they segment tasks which may conflict with one another.

Each Solution must designate which tests are appropriate for each Environment. To be sure, not all tests belong in the same place at the same time. For instance, it is best to segment tests which predominately rely upon controlled data from those which primarily use uncontrolled data. Similarly, Functional Tests seek to validate change as it happens, whereas System Tests cannot validate change until it's complete. Of course, mixing can occur. However, it requires much more design and controls to avoid Test Types conflicting with one another.

Moreover, Environments should allow for periodic refresh, or a staging, to make each ready for use. As a result, this allows for the reuse of many tests and datasets, improving overall quality and coverage. Refer to the Operations Approach for more about Environments.

Stage 5: Test Execution

Unit and Functional testing should occur roughly in parallel with Solution modification and/or configuration. Changes which pass those tests progress on to Integration testing within the scope of work applicable to the current Sprint, and to the aggregation of Sprints within the current Release.

Most problems identified during each Sprint are merely directed to the appropriate Team member to resolve. In other words, a Defect is not opened until after the related Story or Feature is 'Done'. This cycle of change a little, then test a little occurs until the owners accept the corresponding Story or Feature as being 'Done'. To emphasize, there is no way to reach "Done" without a defined amount of successful testing.

Even after individual Stories and Features are 'Done', most Solutions require further testing to ensure that all accepted changes function together properly. This is often the transition from Team-level testing which occurs before a change is 'Done', to QA-level testing which involves only those changes which are 'Done'. Similarly, this is often where testing shifts from Sprint-level to Release-level. Additional validation may involve System and NFR testing. Thereafter, changes which pass those tests become available for UAT, the final check before Production deployment.

Stage 6: Test Cycle Closure

A key aspect of Test Execution is capturing Expected vs Actual results. These are what determine whether the configurations and changes meet the defined Acceptance Criteria. Cycle Closure is the final Stage of a Test's Lifecycle. Here the testers present a Test Cycle Closure Report. Basically, the report summarizes the cycle deliverables:

- Test Components Created / Updated (by type).

- Tests Executed / Execution Results (by type).

- Highlights and explanation of unresolved Issues; and

- Summary of Defects Created / Remediated.

Ideally, tools should allow testers to map the above deliverables to corresponding Features in each Release, and Stories in each Sprint.

These deliverables provide supporting info to the Solution Owner and other key stakeholders such as Business/Process Owners and Epic Owners. On behalf of all stakeholders, the Solution Owner either 'Accepts' or 'Rejects' the changes and test results that correspond to a Story or Feature. Those accepted are now 'Done', and progress towards Production "as is", meaning no further change is expected. Otherwise, those rejected return for more work in subsequent iterations.
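As an illustration only, the Test Cycle Closure Report deliverables listed above could be captured in a small structure such as the following Python sketch. The class name `ClosureReport`, its fields, and the "open defects" calculation are assumptions made for this example. They are not taken from the Testing Strategy or from any particular TMS.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class ClosureReport:
    """Hypothetical container for one Test Cycle Closure Report."""

    cycle: str
    # Test Components created or updated, counted by Test Type.
    components_changed: Counter = field(default_factory=Counter)
    # Execution results, counted by (Test Type, outcome).
    results: Counter = field(default_factory=Counter)
    # Free-text highlights of unresolved Issues.
    unresolved_issues: list = field(default_factory=list)
    defects_created: int = 0
    defects_remediated: int = 0

    def record_result(self, test_type: str, passed: bool) -> None:
        outcome = "pass" if passed else "fail"
        self.results[(test_type, outcome)] += 1

    def summary(self) -> dict:
        executed = Counter()
        for (test_type, _outcome), count in self.results.items():
            executed[test_type] += count
        failed = {
            test_type: count
            for (test_type, outcome), count in self.results.items()
            if outcome == "fail"
        }
        return {
            "cycle": self.cycle,
            "components_changed": dict(self.components_changed),
            "executed": dict(executed),
            "failed": failed,
            "unresolved_issues": list(self.unresolved_issues),
            # Assumption: defects not yet remediated are still open.
            "open_defects": self.defects_created - self.defects_remediated,
        }
```

A report built this way gives the Solution Owner one summary per Test Cycle on which to base an 'Accept' or 'Reject' decision.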

Section 2 - Testing Targets

For something as complex as a packaged enterprise application, it is not reasonable to expect all testing to occur in one place, or at one time. For instance, no one should expect User Acceptance Testing to occur at the same time, or in the same place, as development. Similarly, it is not practical for some piece of functionality to undergo both Functional and System Testing at the same time or in the same place.

In one sense, this reflects that different Test Types are meant to occur at different points in a change's evolution. Indeed, some types seek to validate a change in isolation, and to delve into greater detail using controlled data. Conversely, other types seek to validate a change in broader contexts, ensuring it works with upstream and downstream functionality, using both controlled and uncontrolled data.

In another sense, this also reflects who to involve in various tests. Initially, Teams who make a change should also test each to validate their work, before sending it along to others for review and approval. Afterwards, stakeholders who are not close to individual changes should provide additional verification that the overall Solution, including relevant changes, works as desired. So, how can Solutions segment and control testing?

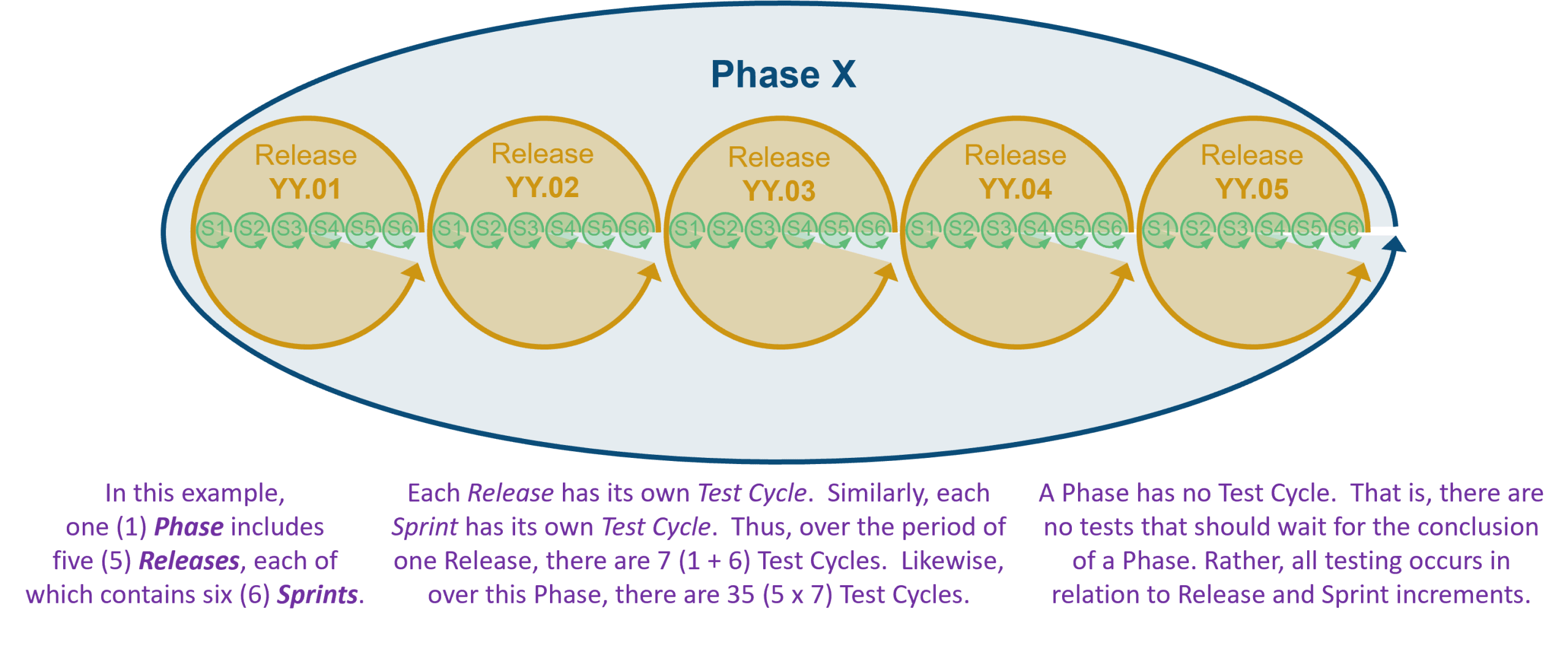

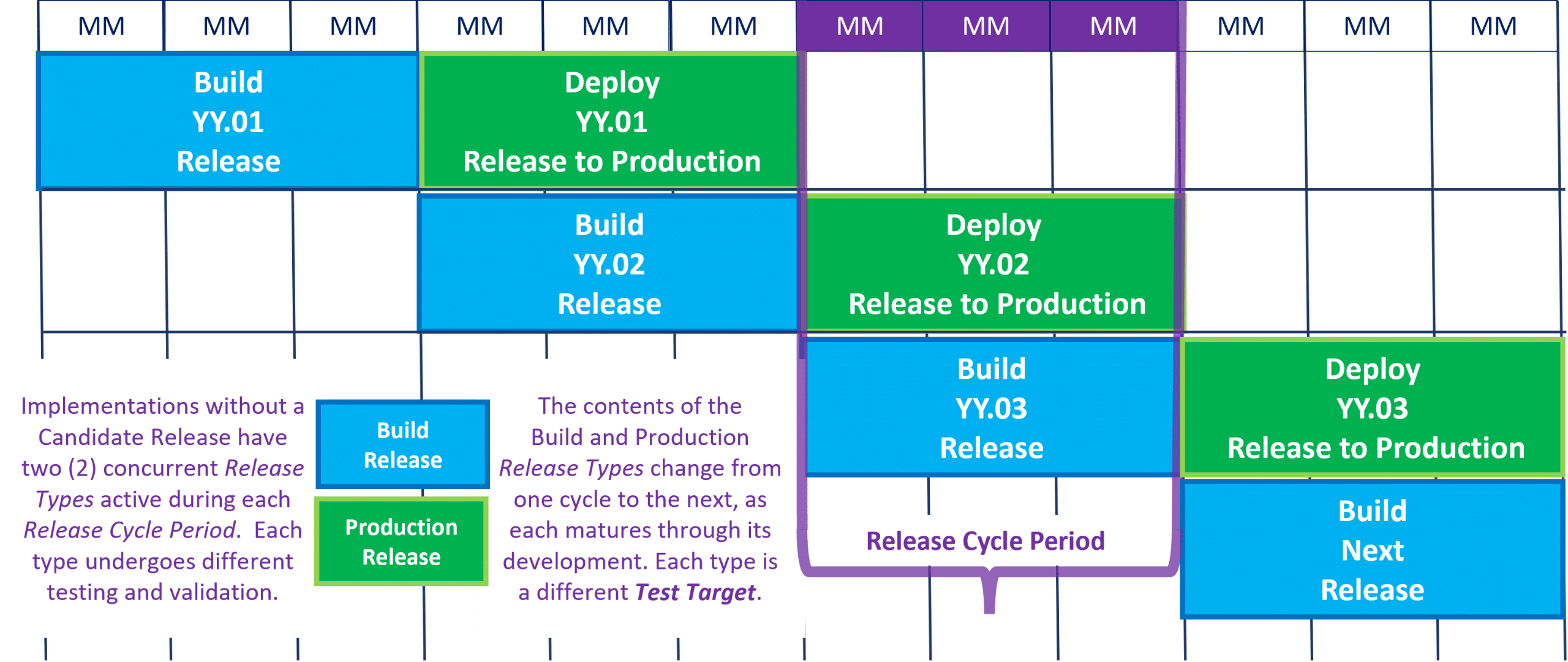

Relating Release Cycles to Test Cycles

Of a Solution's three basic increments - long-term Phases, medium-term Releases, and short-term Sprints - the medium-term offers the most effective increment by which to orchestrate change. Like Goldilocks and The Three Bears, it's not too big, it's not too small, it's just right.

To explain, consider any typical Solution. In the beginning, Teams implement changes to the out-of-the-box application. Whether such changes relate to installation, configuration, RICE objects, operational setup, or any other reason, each change warrants testing.

Of course, implementation of any changes should occur within the scope of a single Sprint. However, given their short term, and limited scope, Sprints are not the appropriate increment for all testing. Although, they most definitely are appropriate for some testing.

Changes progress from being "Ready" for change towards the change being "Done". Generally, Stories are "Done" during Sprints, while Features achieve "Done" over a Release. Because there is no object larger than a Feature to be "Done", no increment longer than a Release requires testing.

In a relatively short period of time, a Solution has (or should have) a Release increment, or version, in Production. In most cases, this is not the entire Solution. Rather, it is a step in the right direction. Thereafter, more steps towards a future state follow.

At the same time, as long as more changes are approved, Teams continue work on another, newer version, or Release increment, to enable more features and additional functionality.

Iterations in which to Test

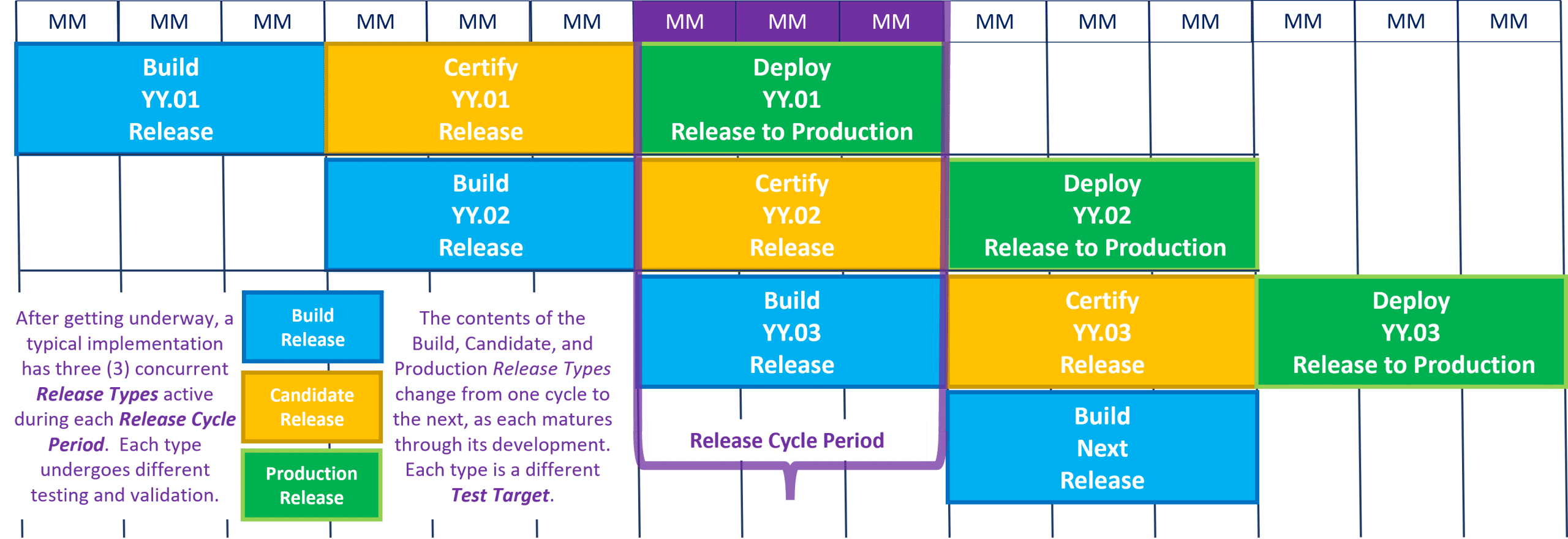

Many Solutions also verify a version between Build and Production - the Candidate. Indeed, a Release increment often needs further validation after it reaches a steady state. The incremental validation (a.k.a. certification) not only includes the full set of application changes, but also their interaction with new Integrations and/or Data Migrations. Likewise, there is often a need to verify a potential Release against other, related Solutions, which themselves may be in similar states of change.

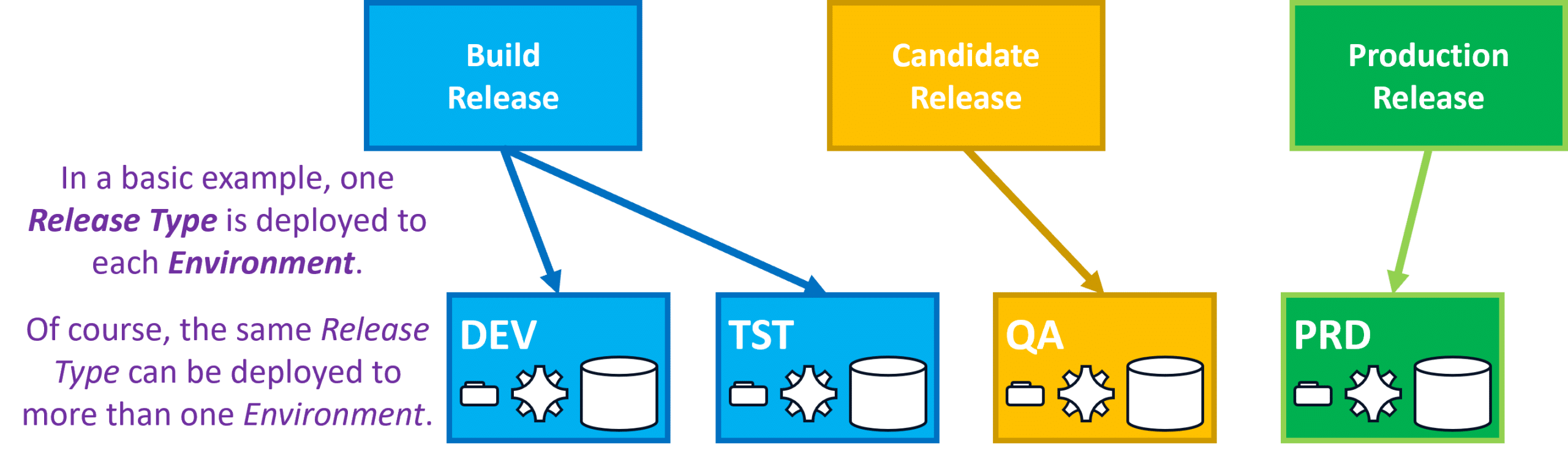

As described below, Testers may refer to the three increments as the Build-Release, the Candidate-Release, and the Production-Release. For each increment, some Test Types, and hence some Test Cycles, are appropriate, while others are not. Accordingly, it is helpful to group Test Cycles with the Release Cycle to which they most closely relate.

Release Advancement

There are several ways in which tests of various types can occur at different times and in different locations. Of course, the most obvious means to segment such tests is by executing them in different Environments. Although, perhaps a more important means for segmenting tests is by understanding what each Environment contains.

While Solutions may have anywhere from a few Environments to more than a dozen, most have only two or three different Releases in flight at any given time. Each Release organizes changes to control what type of change is allowed to occur, when it is allowed, and where it is allowed. Generally, all Solutions have a Production Release. This is what users access to carry out business operations. Similarly, most also have a Build Release, where all changes initially occur. Of course, many also have a Candidate Release, a relatively steady state that follows the Build Release, and is a precursor of the future Production Release.

How Release Advancement Relates to Environments

Each medium-term Release increment has a specific identifier, such as 'YY.xx', to indicate the Release's target year and its sequential version during that year. This value segments one version from another. During the period of each Release Cycle, Release advancement allows more general descriptors to distinguish one version from the next. Indeed, every version, or specific Release, advances through each general Release as the changes it organizes advance towards Production deployment.
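For illustration, the sketch below parses and orders identifiers of this form. It assumes that both parts are exactly two digits (for example '25.03'), which the 'YY.xx' pattern suggests but does not guarantee. The names `ReleaseId` and `parse_release_id` are hypothetical.

```python
import re
from typing import NamedTuple


class ReleaseId(NamedTuple):
    """A specific Release identifier of the assumed form 'YY.xx'."""

    year: int      # two-digit target year, e.g. 25
    sequence: int  # sequential version within that year, e.g. 3

    def __str__(self) -> str:
        return f"{self.year:02d}.{self.sequence:02d}"


# Assumption: two digits, a dot, then two digits.
_PATTERN = re.compile(r"(\d{2})\.(\d{2})")


def parse_release_id(text: str) -> ReleaseId:
    match = _PATTERN.fullmatch(text)
    if match is None:
        raise ValueError(f"not a YY.xx release identifier: {text!r}")
    return ReleaseId(int(match.group(1)), int(match.group(2)))
```

Because a named tuple compares field by field, identifiers parsed this way sort by year first and then by sequence, which is one simple way to tell an earlier version from a later one.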

To be sure, every Environment contains only one version of the Solution. To rephrase it, it is not possible for multiple Releases to exist in the same Environment at the same time. As a result, understanding the Release in each Environment is the first of several effective means by which to segment and organize testing. Moreover, its degree of advancement within the Release Cycle - Build, Candidate, or Production - is also the best indicator of what Test Types are appropriate for each Environment at any point in time.

How Environments Relate to Testing

Recognizing a Solution has just two or three versions advancing at any given time, it becomes easy to see how each Release increment is made available for various purposes to developers, testers, QA, end-users, and others. Basically, it is just a matter of deploying the correct version to the desired Environment.

To be clear, any one Environment cannot contain more than one Release at any given time. However, the same Environment can contain different Releases as they advance at different times. For instance, a QA Environment may contain a Candidate Release for some testing. Afterwards, it may contain the planned Production Release for final testing before Production deployment.

Similarly, over time the same QA Environment can contain the Candidate for each version as it advances towards Production deployment. Accordingly, just as Releases offer an ability to generalize for easier management and control, so too can individual Environments also offer the ability to generalize for common, recurring planning and use by relevant stakeholders.

In short, a Test Target is the combination of a general Release and the single Environment to which it is deployed. To clarify, at any given time, the Release + Environment pair refers to one specific Release, and the contents thereof, at some stage in its advancement towards end-user access.
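One way to picture a Test Target is as a small record that pairs an Environment with the Release it currently holds. The Python sketch below is an illustration under stated assumptions: the names `Target`, `ReleaseStage`, and `Environments`, the Environment name "QA", and the sample version "25.02" are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict


class ReleaseStage(Enum):
    """The general Releases a specific version advances through."""

    BUILD = "Build"
    CANDIDATE = "Candidate"
    PRODUCTION = "Production"


@dataclass(frozen=True)
class Target:
    """A Test Target: one general Release deployed to one Environment."""

    environment: str     # illustrative name, e.g. "QA"
    stage: ReleaseStage  # general Release: Build, Candidate, or Production
    release_id: str      # specific Release, e.g. "25.02"


class Environments:
    """Tracks which single Release each Environment currently contains."""

    def __init__(self) -> None:
        self._deployed: Dict[str, Target] = {}

    def deploy(self, environment: str, stage: ReleaseStage, release_id: str) -> Target:
        # Deploying replaces whatever the Environment held before, so an
        # Environment never contains more than one Release at a time.
        target = Target(environment, stage, release_id)
        self._deployed[environment] = target
        return target

    def target(self, environment: str) -> Target:
        return self._deployed[environment]
```

Keying the registry by Environment name is what enforces the rule that an Environment holds only one Release at a time, while still letting the same Environment hold different Releases at different times.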

Solutions should direct the following tests to an appropriate Test Target.

Build Tests

Build Tests are those which occur during the Sprints which make up a given Build-Release. Test Cycles within each Sprint should include:

- Unit Testing

- Functional Testing; and

- Integration Testing with a Story / Sprint scope, as well as expanding Feature / Release scope.

Delivery Team members develop and execute Components for all three Test Types as part of their efforts to take Stories from "Ready" to "Done".

Build Test Cycles are when Delivery Teams create the majority of Test Cases, Test Scenarios and Test Data Sets.

Certification Tests

Once the final Sprint of a Build-Release ends, DevSecOps create a new Candidate Release which includes only 'Accepted' changes, that is, only those Features which are "Done". Thereafter, the Candidate Release undergoes Test Cycles for:

- Integration Testing with a Release / Solution scope

- System Testing; and, if appropriate

- NFR Testing.

Usually, QA members, not the Delivery Team, create and execute Certification Tests to validate that the Candidate Release is suitable for Production deployment.

Certification Test Cycles build upon and reuse many of the existing Test Components. However, given the larger scope involved, QA must also create additional components.

Rollout Tests

Rollout Tests occur just prior to Production deployment. Analogous to a Candidate's creation, some Features may not be 'Accepted' after failing Certification. As a result, DevSecOps either Toggles-Off applicable Features (preferably) or removes functionality from the Candidate-Release (less preferable). The result is the next Production-Release.

The final Test Cycle involves:

- User Acceptance Testing (UAT).

SMEs, Business / Process Owners, or individuals they designate, execute UAT. They may produce their own Test Components, or reuse those prepared for earlier tests. Although, UAT should never test anything that was not already covered by System Tests. To emphasize, UAT should not evaluate any functionality not previously passed through prior Test Cycles.
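The three release-specific groupings described above (Build, Certification, and Rollout Tests) can be summarized as a simple lookup. The sketch below is only one possible representation. The names `ReleaseStage`, `TEST_CYCLES`, and `stages_for` are hypothetical, and the sketch ignores the difference in scope between Build-level and Candidate-level Integration Testing.

```python
from enum import Enum
from typing import List


class ReleaseStage(Enum):
    BUILD = "Build-Release"
    CANDIDATE = "Candidate-Release"
    PRODUCTION = "Production-Release"


# Test Types whose Test Cycles belong to each general Release.
TEST_CYCLES = {
    # Build Tests, run by Delivery Teams during Sprints.
    ReleaseStage.BUILD: {"Unit", "Functional", "Integration"},
    # Certification Tests, usually run by QA (NFR only if appropriate).
    ReleaseStage.CANDIDATE: {"Integration", "System", "NFR"},
    # Rollout Tests, run just prior to Production deployment.
    ReleaseStage.PRODUCTION: {"User Acceptance"},
}


def stages_for(test_type: str) -> List[ReleaseStage]:
    """Return the general Releases at which a Test Type is appropriate."""
    return [stage for stage in ReleaseStage if test_type in TEST_CYCLES[stage]]
```

For example, `stages_for("Integration")` returns both the Build and Candidate stages, reflecting that Integration Testing occurs at Sprint scope first and at Release scope later.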

Environment Tests

Finally, a small set of Test Types allows Testers to execute recurring validations against any Environment at any time. Typically, these tests seek to validate that changes made to some part of the Solution have not caused problems elsewhere, in areas which appear unaffected by such change.

These Test Cycles include:

Environment Tests may be executed against any environment containing a Build-Release, Candidate-Release, or Production-Release. Because execution of these Test Cycles will occur often, automating these tests is a good investment.

Section 3 - Testing Sequencing

Time is the limiting factor for test cycles. If it takes longer to validate an increment than it does to produce one, then Solutions wind up with an ever-increasing backlog of increments to validate. Moreover, at any given time they can wind up with far more than three versions still in progress. As a result, the need for post-Build testing largely drives the number of Sprints to include in Releases.

For instance, if it takes two months to run all tests on a Candidate-Release, then include at least 4 Sprints (4 x 2 weeks = 8 weeks) in each Build-Release to avoid the scenario where Builds occur more often than Certifications. If all Candidate testing can conclude in one month, then perhaps include just 2 Sprints in each Release. Conversely, Releases should not include more than 6 Sprints.

Thus, if more than three months is required to validate an increment, consider adding additional resources to shorten the timeframe to under 3 months. Alternatively, look to reduce the amount or type of changes made during the Sprints of each Release. Solutions which include Candidate-Releases should start with a larger number of Sprints (4 - 6) and fewer Releases per year.
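The sizing rule above reduces to simple arithmetic. The following Python sketch assumes two-week Sprints and treats a month as four weeks, as the "4 x 2 weeks = 8 weeks" example does. The function name `min_sprints_per_release` and the constants are hypothetical.

```python
import math

SPRINT_WEEKS = 2  # Sprint length taken from the "4 x 2 weeks" example
MAX_SPRINTS = 6   # upper bound on Sprints per Release stated in the text


def min_sprints_per_release(certification_weeks: float) -> int:
    """Smallest Sprint count whose Build period covers Candidate testing."""
    needed = max(1, math.ceil(certification_weeks / SPRINT_WEEKS))
    if needed > MAX_SPRINTS:
        # The text advises adding resources or reducing the amount of
        # change rather than lengthening the Release any further.
        raise ValueError(
            f"{certification_weeks} weeks of testing needs {needed} Sprints, "
            f"more than the {MAX_SPRINTS}-Sprint limit"
        )
    return needed
```

Under these assumptions, `min_sprints_per_release(8)` gives 4 and `min_sprints_per_release(4)` gives 2, matching the two examples in the text, while anything beyond twelve weeks raises an error to signal that the test effort itself needs attention.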

Over time, as stakeholders become more efficient in testing, they may consider reducing the number of Sprints in each Release. However, this change may occur only at the start of a later Phase. Indeed, the start of a new Phase is the correct time to update this Approach, and all other Solution Strategy & Architecture materials, to reflect such changes.

Parallel Build of Solution and Tests

An iterative approach facilitates testing at appropriate times throughout the delivery cycles. For instance, Testers direct smaller scope tests, using controlled data, towards each new Build Release. Afterwards, Testers direct larger scope tests, using uncontrolled data, towards a Candidate Release.

Different Test Targets help focus and manage overall test efforts. A Release can exist in multiple Environments, providing additional Targets and flexibility to orchestrate more tests into smaller timeframes. That is, Environments allow many tests to occur in parallel rather than in series.

To clarify, all tests for a change do not occur in parallel. Rather, Test Targets allow Functional Testing of some changes to occur at the same time as Integration Testing of other changes, and System Testing of yet more changes. In effect, this approach creates an almost continuous production-line of testing.

Generally, the development and execution of most tests should follow a consistent sequence. Although, it is critical to avoid gaps in this sequence. To emphasize, this approach works only when each Test Type fulfills its role in the broader testing picture.

From a development perspective, the sequence applies the concept of reuse. That is, the ability to take existing Test Components and merely extend them, or clone and modify them, rather than recreating components from scratch.

From an execution perspective, the sequence applies the concept of building trust and confidence layer upon layer. That is, using lower-level tests to establish a foundation which higher-level tests build upon, without the need to repeat earlier validations.

Together, Test Stages, Test Targets, and Test Sequencing facilitate significant reuse among Test Components and Test Types. Indeed, maximizing reuse is critical if testing is to have any hope of achieving its objectives without impeding the delivery process.

Test Development & Execution Sequence

The first tests to develop are the Build Tests. Basically, these are tests which relate to validating the Build Release. To begin, create the following tests in the order presented.

Unit Tests - UNT

When appropriate for a Solution, create Unit Test components in parallel with the changes being made. Generally, these apply to the creation or modification of any RICE objects - Reports, Integrations, Conversions or Extensions.

Depending upon the Tools in use, these tests may reside in a development tool rather than the Test Management System (TMS). Accordingly, reuse of Unit Test components may apply more to other Unit Tests, rather than other Test Types.

Unit Test planning, development, execution and the reporting of their results is usually the responsibility of a Team's Solution Analyst. Identify Unit Test Components using the 'UNT' identifier to distinguish them from other components.

Functional Tests - FNC

Develop Functional Test components either prior to, or in parallel with, the change(s) which they validate.

Just as increments of functionality should become available for testing multiple times per day, Teams should produce and execute corresponding increments of the Functional Test(s) multiple times per day. Functional Test results feed back to those making the change to guide further development or corrections.

Planning, development, execution, and reporting of Functional Tests is usually the responsibility of a Team's Test Analyst. Although, any Team member may assist and support the development and execution of Functional Tests. Identify Functional Test Components using the 'FNC' identifier to distinguish them from other components.

Integration Tests - INT (Build)

Derive Integration Tests from corresponding Functional Tests. That is, copy / clone Functional Test Components and then modify each to suit new objectives.

Scope of these Integration Tests is typically limited to the changes which occur during the Sprint / Build-Release. That is, Build-level Integration Tests do not seek to validate a series of functions beyond those affected within the current Build-Release.

Integration Test planning and development is usually the responsibility of a Team's Test Analyst, while any (or all) Team members may execute tests and report results. Identify Integration Test Components using the 'INT' identifier to distinguish them from other components.

The next set of tests to develop are the Certification Tests. In short, these are tests which relate to validating the Candidate Release. To continue, create the following tests in the order presented.

Integration Tests - INT (Candidate)

An extended set of Integration Tests seeks to validate changes within a Candidate-Release. In effect, these tests further validate new functionality against changes made in all prior Production-Releases. In other words, these seek to verify that all new changes work in concert with all previously existing functionality.

Basically, compile these tests from existing Build-level Integration Test components, then extend them where needed to cover broader sections of scope. Additionally, the focus of these tests is less about validating individual changes, and more about validating the flow of data throughout the Solution.

Ideally, planning, development, execution and reporting of these Integration Tests is the responsibility of a QA group. Otherwise, individual Teams must create and execute these tests. Continue to use the 'INT' identifier for these components.

System Tests - SYS

All prior tests used controlled data to trigger specific events and errors. Moreover, they sought to isolate each of the three basic change types - Configuration, RICE Objects, and Operational Data - to independently validate each change in detail.

At this point, Testers begin using uncontrolled data. Introducing this variable in System Tests continues to validate that all changes properly interact with one another to enable Production-like transactions to flow through the system.

Derive System Test components from corresponding Integration Tests. In many cases, the only difference should be the Test Data Sets used.
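As a minimal sketch of this kind of derivation, the Python example below clones an Integration Test component and swaps only its Test Data Set. The `Component` record, its fields, and the sample names are assumptions for illustration. A real TMS will have its own way to copy or clone components.

```python
from dataclasses import dataclass, replace
from typing import Tuple


@dataclass(frozen=True)
class Component:
    """Hypothetical Test Component record."""

    test_type: str           # identifier such as 'INT' or 'SYS'
    name: str
    steps: Tuple[str, ...]   # ordered test steps
    dataset: str             # name of the Test Data Set used


def derive_system_test(integration_test: Component, uncontrolled_dataset: str) -> Component:
    """Clone an Integration Test, changing only its type and data set."""
    if integration_test.test_type != "INT":
        raise ValueError("expected an Integration Test component")
    # replace() returns a copy, so the original component is left untouched.
    return replace(integration_test, test_type="SYS", dataset=uncontrolled_dataset)
```

Keeping the steps identical and changing only the data set mirrors the statement above that, in many cases, the data is the only difference between the two components.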

QA plans and develops System Tests. Alternatively, Teams must pick up these tests if no independent QA group is used. Execution begins with those developing the tests. However, these are the first tests which must also engage Customers or future users. Identify System Test Components using the 'SYS' identifier to distinguish them from other components.

NFR Tests - NFR

For Solutions which require them, Non-Functional Tests occur in conjunction with System Tests. After certain functionality passes initial System Testing, it may then undergo further validation for things like performance, scalability, and localization.

It is important that stakeholders recognize that many NFR Tests are some of the largest, most complex, and expensive tests to design, build, and execute. As a result, they cannot address all possible scenarios. Stakeholders must be judicious in choosing which tests are most relevant and cost effective.

Derive most NFR Tests from Integration Tests and/or System Tests. It is not uncommon for NFR Tests to combine both controlled and uncontrolled data to create desired data volumes.

In some cases, QA is responsible for NFR Tests. However, many organizations use special groups or individuals dedicated to this type of testing. Identify Non-Functional Requirements Test Components using the 'NFR' identifier to distinguish them from other components.

If the Solution does not use a Candidate Release, then Testers must find the resources to develop and execute Certification Tests using the Build Release. To emphasize, Releases require Certification Testing, regardless of whether a Solution uses a Candidate Release or not.

The next set of tests to develop are the Rollout Tests, those which relate to validating the Production Release.

User Acceptance Tests - UAT

Basically, User Acceptance Tests validate that resolutions to any errors raised by prior Test Types are to the Customer's satisfaction. Alternatively, errors may still exist, provided an acceptable workaround exists. In other words, these tests allow Customers to determine whether a Candidate-Release may progress to Production "as is", or not.

UAT is a short cycle, lasting from a few hours to a few days. It should not be a lengthy cycle that repeats System Test. This is often quite different from other approaches. However, maintaining Sprint and Release time constraints allows for little redundancy.

Users may derive UAT components from System Tests or Integration Tests. Significantly, UAT should never introduce new tests. That is, Users are not free to invent new scenarios which prior testing did not cover. Indeed, the time to raise such scenarios was during Requirements Analysis or, failing that, during Integration or System Testing. To be sure, UAT is not the time to introduce new requirements. Identify User Acceptance Test Components using the 'UAT' identifier to distinguish them from other components.

The final tests to develop are the Environment Tests, which validate any Environment, regardless of which Release it contains.

Smoke Tests - SMK

In most cases, Smoke Tests evolve as Integration Tests grow to cover new features and functionality.

Initially, Smoke testing will be largely ad hoc and manual. In some cases, the DevSecOps team creating or deploying to the Environment will execute these tests prior to making the Environment available for use. Alternatively, a QA group may also take ownership of these tests to provide an independent check and balance.

Over time, Solutions should seek to automate these tests. Environment creation, refreshes, and (re)deployments will be common occurrences over a Solution's lifecycle. Identify Smoke Test Components using the 'SMK' identifier to distinguish them from other components.

Regression Tests - RGR

Likewise, Regression Tests also evolve as Integration Tests grow to cover new features and functionality.

Because of their recurring nature, automation of Regression Tests should occur from the start. If they are not automated, then they will either not occur, not cover much, cause more problems than they prevent, or consume an

In some cases, DevSecOps or QA may create and manage these tests. Alternatively, either may supervise an individual or team that specializes in automating tests. In this case, the same people typically automate the Smoke Tests as well. Identify Regression Test Components using the 'RGR' identifier to distinguish them from other components.

Section 4 - Testing Summary

This section summarizes relevant considerations which affect testing setup.

Use Table SA6.1 to help estimate resources - infrastructure, staffing, funding, etc. - as well as timing applicable to Testing. For additional information on the related Testing Tools or Test Types applicable for this Solution, refer to the Testing Strategy.

Backlog Testing Features

Unlike each of the other Approaches in the Solution Strategy & Architecture Series, Testing in general does not add specific Features to the Solution Backlog.

However, the testing expected of various changes, as outlined above, must factor into the estimate of each Feature's size. Feature estimates should include both the effort to make a change and the Build Tests such a change requires.

If this Solution uses a Candidate Release, then all Feature estimates need only include efforts to create and execute relevant Build Tests. Conversely, if the Solution does not use a Candidate Release, then all Feature estimates should include the effort to create and execute both Build Tests as well as relevant Certification Tests.
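The estimating rule above can be sketched as a small function. This is an illustration only: the `FeatureEffort` fields, the effort units, and the sample figures are assumptions, not values this Approach prescribes.

```python
from dataclasses import dataclass


@dataclass
class FeatureEffort:
    """Hypothetical effort figures for one Feature, in any consistent unit."""
    change: float               # effort to make the change itself
    build_tests: float          # effort to create and execute Build Tests
    certification_tests: float  # effort to create and execute Certification Tests


def feature_estimate(effort: FeatureEffort, uses_candidate_release: bool) -> float:
    """Return the effort to include in the Feature's size estimate."""
    total = effort.change + effort.build_tests
    if not uses_candidate_release:
        # Without a Candidate Release, Certification Tests run against the
        # Build Release, so the Feature estimate must carry that effort too.
        total += effort.certification_tests
    return total


effort = FeatureEffort(change=5, build_tests=8, certification_tests=6)
print(feature_estimate(effort, uses_candidate_release=True))   # 13
print(feature_estimate(effort, uses_candidate_release=False))  # 19
```

The Certification Test effort does not disappear when a Candidate Release is used. It simply falls outside the Feature estimate and must be planned separately.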

Table Values to Compile

Table SA6.1 compiles relevant testing drivers for the Solution. It lists the following:

- Testing Consideration: A question which affects some aspects of testing.

- For this Solution: A Yes / No answer to the question posed.

Table SA6.1: [Solution ID / Name] - Testing Drivers

| Testing Considerations | For this Solution |
|---|---|
| Will this Solution use an independent Quality Assurance (QA) Group? | Yes / No |
| Will this Solution use a Candidate Release? | Yes / No |
| Will this Solution allow bug fixes to a Candidate Release? | Yes / No |
| Will this Solution allow hot fixes to a Production Release? | Yes / No |
| Will Environment refreshes have the option to purge transactional and other select data? | Yes / No |
| Will controlled data and uncontrolled data be mixed in the same Environment at the same time? | Yes / No |

Section 5 - Approach per Solution Evolution Phase

The final section of this Approach defines which Tests to expect during each Solution Phase. Previously, Table SA6.1 - Testing Drivers established considerations which affect testing.

Testing by Solution Phase

At this point, tables in this section capture decisions that result from those earlier estimates and related resource planning. As a result, they also tie to the Solution Roadmap. Moreover, rows in each table ensure alignment of individual Tests to the overall Testing Strategy.

Unlike other Approaches, this one does not add specific Features to the WMS. Hence, rows do not indicate a WMS Key.

Table Values to Compile

The header for each Table is 'Phase [Phase ID]: [Phase Name] - Testing'. For each Phase, the values to compile in each table include:

- Test Type: From the Testing Strategy, an identifier for a specific type of test.

- Test Sequence: A general indicator of the order in which each test should occur.

- Target Release: The general Release to which the type of test applies.

- Target Environment: From the Operations Approach, the Environment in which to execute the test.

- Design Pattern: Also from the Testing Strategy, the pattern, and hence the Tool, which this test will use.

- Group Responsible: Basically, whether individual Teams or an independent QA Group will own and manage these tests.

Table SA6.2: [Solution Phase ID / Name] - Testing

| Test Type | Test Sequence | Target Release | Target Environment(s) | Design Pattern(s) | Group Responsible |
|---|---|---|---|---|---|
| Unit Test | 1 | Build | Local BLD-DEV | TBD | Team |
| Functional Test | 2 | Build | BLD-DEV | TBD | Team |
| Integration Test | 3 | Build | BLD-TST | TBD | Team |
| Integration Test | 4 | Candidate | CDT-TST | TBD | QA |
| System Test | 5 | Candidate | CDT-QA | TBD | QA |
| NFR Testing | 6 | Candidate | CDT-QA | TBD | Automation |
| UAT Testing | 7 | Production | CDT-QA | TBD | QA |
| Smoke Testing | 8 | All | All | TBD | QA |
| Regression Testing | 9 | Any | Any | TBD | QA |
| Functional Test - Bug Fix | 10 | Candidate | CDT-DEV | TBD | Team |
| Functional Test - Hot Fix | 11 | Production | PRD-DEV | TBD | Team |
| Integration Test - Hot Fix | 12 | Production | PRD-DEV | TBD | Team |
| System Test - Hot Fix | 13 | Production | PRD-QA | TBD | QA |
| UAT Test - Hot Fix | 14 | Production | PRD-QA | TBD | QA |

Where to Execute Tests

The Environments described below must align with those which the Operations Approach defines.

Build Testing

Build Release Development Environment (BLD-DEV) is where Unit Testing and Functional Testing of new changes occur. This environment may be refreshed by developers at any time, allowing changes to be deployed and validation to begin multiple times per day.

Build Release Test Environment (BLD-TST) is where Build-level Integration Testing occurs. Build-level testing refers to limiting scope to changes which are being made within the current Release. DevSecOps promotes validated changes from BLD-DEV on a daily or twice-daily schedule. In other words, they promote only changes which pass Unit & Functional Testing to this environment, leaving it relatively clean for test efforts.

Certification Testing

Candidate Release Development Environment (CDT-DEV) is where Unit & Functional Testing of Candidate Release Bug fixes occurs.

Candidate Release Test Environment (CDT-TST) is where Candidate-level Integration Testing occurs. Candidate-level refers to extending scope to include all changes made within the Release, plus how those changes work with any changes made in prior Releases. In most cases, this is the first Test Type to be executed on a Candidate.

Candidate Release QA Environment (CDT-QA) is where System and NFR testing occurs. This environment is typically a copy of Production, with Solution infrastructure and Solution Components mirroring those of Production.

Rollout Testing

UAT is also conducted in the Candidate Release QA Environment (CDT-QA).

Based on results from the prior Test Types, decisions are made whether to allow each Feature to progress to Production:

- Toggled-On;

- Toggled-Off; or

- Not at all.

DevSecOps will make a final rebuild of the Candidate Release taking these decisions into account.

Users are then asked to confirm that the resulting Production Release is acceptable for Move to Production.
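As an illustration of how the three per-Feature decisions could feed the final rebuild, the sketch below maps each Feature to a toggle state and leaves out anything marked "Not at all". The `Decision` enum, the `plan_final_rebuild` function, and the Feature keys are hypothetical. An actual rebuild depends on the tooling DevSecOps uses.

```python
from enum import Enum


class Decision(Enum):
    """The three outcomes available for each Feature."""
    TOGGLED_ON = "Toggled-On"
    TOGGLED_OFF = "Toggled-Off"
    NOT_AT_ALL = "Not at all"


def plan_final_rebuild(decisions: dict[str, Decision]) -> dict[str, bool]:
    """Return the Features to include in the rebuild, mapped to their toggle state.

    Features decided as "Not at all" are excluded from the result entirely.
    """
    return {
        feature: decision is Decision.TOGGLED_ON
        for feature, decision in decisions.items()
        if decision is not Decision.NOT_AT_ALL
    }


# Hypothetical Feature keys, for illustration only.
decisions = {
    "F-101": Decision.TOGGLED_ON,
    "F-102": Decision.TOGGLED_OFF,
    "F-103": Decision.NOT_AT_ALL,
}
print(plan_final_rebuild(decisions))  # {'F-101': True, 'F-102': False}
```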

End Template

Optionally, include the following Checks & Balances.

Checks & Balances - Alignment to Other ITM Artifacts

This section is available in many Solution Strategy & Architecture artifacts, to help those who create, and use, this information see the "bigger picture".

Checks & Balances highlight where similar information appears in multiple locations. The reason to display info more than once is to help ensure alignment and consistency across all stakeholders. Each appearance offers a different perspective for a different audience. The more widely available such information becomes, the more likely it is to drive common understanding. As a result, this helps initiatives to progress more quickly and with fewer impediments.

Section 1 - Testing Stages

Section 1 offers an overview of the stages through which all tests progress. Stage 1: Requirements Analysis, corresponds to the definition of Acceptance Criteria. For Features, Teams define Acceptance Criteria during Feature Analysis & Design. For Stories and Bugs, Teams define Acceptance Criteria during Story Analysis & Design. To clarify, Requirements Analysis occurs prior to any Feature or Story becoming 'Ready'. Moreover, while analysis occurs in parallel to Sprints and Releases, the work to make a Feature or Story 'Ready' does not occur within a Sprint or Release timeframe. In other words, there are no time bounds on the analysis for each Feature and Story.

Conversely, all other stages occur in relation to each Sprint and/or Release iteration. These stages correspond to taking a Feature or Story from 'Ready' to 'Done'. Some Test Cycles occur each Sprint, while other cycles relate to each Release. No Test Cycle applies to the Phase in which each Release and Sprint occur. Appropriate tests must have acceptable results for each Story or Bug to become 'Done' by Sprint's end, as well as for each Feature to become 'Done' by the end of the Release's Last Sprint. Alternatively, any Feature or Story without acceptable results returns for further work during a subsequent iteration.

Section 2 - Testing Targets

Section 2 ties testing to the short-term, medium-term, and long-term iterations which organize the Solution's timeframes. Specifically, it describes how medium-term Releases are the primary means by which to plan, organize, and manage testing. Although, there is additional planning, organizing, and managing of various tests during each Sprint.

Moreover, this section introduces the Build Release, Candidate Release, and Production Release concepts. Each of these general terms represents a specific version of the Solution over time, as the changes which each Release organizes advance towards end-user access. The Operations Approach defines the Environments to which a Solution may deploy one of these general Releases.

To help guide testing, this section also groups related Test Types to target certain types of validation as changes progress towards Production deployment. These groups - Build Tests, Certification Tests, and Rollout Tests - facilitate definition of when to conduct tests, where to conduct tests, and who has responsibility and ownership of such tests.

Section 3 - Testing Sequence

Section 3 outlines the basic test production and execution. In short, it describes how different Test Types relate to one another. Using the various types, in the proper order, builds a strong foundation of trust in the Solution.

Generally, initial test development and execution which occurs during Sprints is a key part of Story Build. In other words, appropriate testing represents significant steps in taking every Story from 'Ready' to 'Done'.

Likewise, different test development and execution which occurs throughout a Release is a key part of Feature Release. Indeed, after Sprints, additional validation allows changes to progress from the Build Release to the Candidate Release, and on to Production.

Section 4 - Testing Summary

Unlike in other Approaches, this Summary section does not add Features to the Solution Backlog.

Table SA6.1 - [Solution ID / Name] - Testing Drivers

Rather than identify values which appear in other ITM artifacts, Table SA6.1 poses a short list of questions. While the questions may appear relatively straightforward, their answers have significant impacts on resource planning, change control processes, and Operations.

For instance, what appear to be simple Yes / No questions about bug or hot fixes can require quite a bit of additional setup to facilitate the ability to make fixes properly. Likewise, decisions about data purging, or mixing controlled and uncontrolled data, have big impacts on test planning and execution.

Section 5 - Approach per Solution Evolution Phase

As with the prior section, this Approach does not manage expectations for the delivery of any specific Feature.

Table SA6.2 - [Solution Phase ID / Name] - Testing

Rather, Table SA6.2 consolidates many test-related decisions into a concise list of testing characteristics. Accordingly, the rows in this table should provide a comprehensive list of all testing which relates to this Solution.

How to Develop a Testing Approach

In most cases, a Solution Architect or Application / Technology Architect facilitates the creation or update of this Approach. However, they should do so in collaboration with Epic Owners as well as relevant Business / Process Owners. Additionally, if the Solution will use a QA Group, their involvement is a must.

To create the initial version, use the preceding template content on this page. Otherwise, to update this Approach for a subsequent Phase, use the most recent version from a preceding Phase. Thereafter, follow the steps below to create or update a Solution's Testing Approach.

Regardless of which Phase the artifact is for, completion of the current version occurs during Phase Inception. At that time, the long-term scope of the Phase, including what testing to anticipate, is set. As a result, the finalized Approach per Solution Evolution Phase section defines the scope of Testing for the next Phase.

Section 1 - Testing Stages

Section 1 is entirely template material. For the most part, it is relatively basic information and common sense. There is no need for any changes if the content is suitable for this Solution. Look to the ITM section on Test Stages for additional information. If appropriate, then copy relevant portions here.

Conversely, if the content is not applicable or appropriate, then edit to suit this Solution. Although, recognize that whatever remains must be sufficient for stakeholders to build a sense of trust in the resulting Solution and any changes to it. Otherwise, delays and extra costs will mount, reducing ROI.

Section 2 - Testing Targets

Similarly, Section 2 is also entirely template material. Look to the ITM section on Test Targets for additional information. If appropriate, then copy relevant portions here. Ensure the Test Types listed correspond to the applicable Test Types defined by the Testing Strategy.

The main takeaway is that Test Cycles must be ongoing and relatively small. Moreover, any attempt to defer testing until Phase end implies the Solution is following a waterfall approach rather than an iterative approach.

All stakeholders must recognize that the development of Test Components and execution of relevant Tests consumes a much larger portion of resources than does making changes themselves. Yet these must occur for any change to be 'Done'.

Section 3 - Testing Sequence

As above, Section 3 is pretty much template material. Look to the ITM section on Test Sequencing for additional information. If appropriate, then copy relevant portions here. Although, the contents in this section must align to the applicable Test Types as defined by the Testing Strategy.

In this case, the main takeaway is that test development and execution must design for reuse. As stated above, testing consumes more resources than the changes it validates. As a result, if the design, development, and execution of testing is not both efficient and effective, then multiple problems arise.

Firstly, an inability to test sufficiently and effectively will lead to diminished stakeholder trust in the Solution. Secondly, an inability to test quickly and efficiently leads to a growing backlog of validations. Both problems prevent changes from progressing towards end-user benefit. As a result, costs mount with little benefit, and the Solution yields little to no ROI.

Section 4 - Testing Summary

At this point, it is time to summarize the information compiled above into a relatively short list for stakeholder reference.

Step 1: Determine Testing Conditions - Create / Update Table SA6.1

Complete Table SA6.1 by answering several key questions which relate to Testing Considerations. The answers to each question affect multiple iterative aspects of this Solution, including Planning, Implementation, Testing, and Reporting.

The following are brief explanations of the impacts each question has upon the Solution. Feel free to add questions, as well as a description of the impact of each, to this list. Indeed, doing so will help refine the Testing of this Solution within your organization.

Testing Consideration Impacts

QA Involvement

If using a QA Group, then check to see if they require their own, independent Environment(s) for testing. Certification tests are best conducted in Environments separate from ongoing Build activities.

Candidate Release

If using a Candidate Release, then plan for Environment(s) in which to deploy each Release as it advances to become a candidate. A Build Release and Candidate Release cannot coexist in the same Environment at the same time. Separate Environments for a Candidate Release also address the need for an independent QA Environment(s) (see previous). Alternatively, each could share the same Environment, but at different times. This may be appropriate when no QA group is involved, and Teams must perform Certification Testing themselves.

Bug Fixes

If the Solution allows Bug fixes to a Candidate Release, then it should consider where those changes originate. They should not originate in a Build Release Environment, because other work-in-progress which may affect the fix is not present in the Candidate. Similarly, if QA wants independent Environments, then changes should not occur where Certification Testing is conducted. Establishing a small CDT-DEV and/or CDT-TST Environment for the Candidate Release is often the best approach.

Note: Those making bug fixes must also port the changes back to Build development to ensure the fix's inclusion in all future Releases.

Production Hot Fixes

If the Solution allows hot fixes to a Production Release, then it should consider where those changes originate. They should not originate in a Build or Candidate Release Environment, because other changes may affect results. Solutions could temporarily replace an existing Build or Candidate Environment with a Production Release until the fix is complete. Although, this will disrupt much other work. Establishing a small PRD-DEV and/or PRD-TST Environment for the Production Release is often the best approach.

Note: Those making any Hot Fixes must also port the changes back to Build development to ensure the fix's inclusion in all future Releases. Likewise, they must also port the changes back to Candidate development to ensure the fix's inclusion in the next Release.
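The impacts described so far can be summarized in a rough planning sketch. The Environment names follow those used in Table SA6.2, but the selection logic is an assumption that simplifies the "and/or" options above into a single choice, and both function names are hypothetical.

```python
def planned_environments(
    uses_candidate_release: bool,
    allows_bug_fixes: bool,
    allows_hot_fixes: bool,
) -> list[str]:
    """Suggest Environments to plan for, given the Table SA6.1 answers."""
    environments = ["BLD-DEV", "BLD-TST"]  # Build Testing always needs these
    if uses_candidate_release:
        environments += ["CDT-TST", "CDT-QA"]
        if allows_bug_fixes:
            # Bug fixes should originate in the Candidate, not in Build.
            environments.append("CDT-DEV")
    if allows_hot_fixes:
        # Hot fixes should originate in a Production Release Environment.
        environments.append("PRD-DEV")
    return environments


def port_back_targets(fix_type: str) -> list[str]:
    """Return the Releases to which a fix must be ported back."""
    targets = {
        "bug": ["Build"],               # Candidate Release bug fix
        "hot": ["Build", "Candidate"],  # Production Release hot fix
    }
    if fix_type not in targets:
        raise ValueError(f"unknown fix type: {fix_type!r}")
    return targets[fix_type]


print(planned_environments(True, True, False))
# ['BLD-DEV', 'BLD-TST', 'CDT-TST', 'CDT-QA', 'CDT-DEV']
print(port_back_targets("hot"))  # ['Build', 'Candidate']
```

Whether a QA Group also needs its own Environments is left out here, because that answer comes from the QA Group rather than from a fixed rule.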

Data Purges with Refresh

A refresh is what occurs when one Release replaces another in an Environment. When this occurs, if there is an option to remove transactional and other data, then the design of Test Components may consider a degree of reuse within Environments. Conversely, if there is no option to purge data, then the design of Test Components must consider that many would require modification prior to each use.

Note: An option to purge data implies the Solution must develop the necessary script(s) to effect proper purging. This is not a feature of most delivered applications. In this case, add Feature(s) to the Solution Backlog to produce the required script(s).
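To give a sense of what such a script involves, the sketch below purges transactional tables while leaving configuration untouched, using an in-memory SQLite database. The table names and schema are entirely hypothetical. Which data counts as transactional, and how to remove it safely, is specific to each Solution and its applications.

```python
import sqlite3

# Hypothetical transactional tables, listed child-first so that dependent
# rows are removed before the rows they refer to.
TRANSACTIONAL_TABLES = ("order_lines", "orders")


def purge_transactional_data(conn: sqlite3.Connection) -> None:
    """Delete all rows from the transactional tables in one transaction."""
    with conn:  # commits on success, rolls back if any statement fails
        for table in TRANSACTIONAL_TABLES:
            conn.execute(f"DELETE FROM {table}")


# Demonstration against a throwaway in-memory database.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE config (key TEXT PRIMARY KEY, value TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT);
    CREATE TABLE order_lines (order_id INTEGER, item TEXT);
    INSERT INTO config VALUES ('currency', 'USD');
    INSERT INTO orders VALUES (1, 'ACME');
    INSERT INTO order_lines VALUES (1, 'widget');
    """
)
purge_transactional_data(conn)
print(conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0])  # 0
print(conn.execute("SELECT COUNT(*) FROM config").fetchone()[0])  # 1
```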

Blending Controlled and Uncontrolled Data

If controlled data and uncontrolled data will both be used in the same Environment at the same time, then the design of Test Components must identify means by which to differentiate the two. Otherwise, one data type runs the risk of corrupting the other, as well as the tests which relate to each type. Testers must look to things like primary keys, the segmentation of Operational Data, and other means by which to identify what data is controlled and what is not.
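One possible way to differentiate the two is a reserved primary key prefix for controlled data. The sketch below assumes such a convention. The `TST-` prefix, the `id` field, and the sample records are hypothetical, and a real Solution may need to rely on data segmentation or other markers instead.

```python
# Hypothetical convention: controlled test data uses a reserved key prefix.
CONTROLLED_PREFIX = "TST-"


def is_controlled(primary_key: str) -> bool:
    """Return True when the key follows the controlled-data convention."""
    return primary_key.startswith(CONTROLLED_PREFIX)


def split_records(records: list[dict]) -> tuple[list[dict], list[dict]]:
    """Separate records into (controlled, uncontrolled) by primary key."""
    controlled, uncontrolled = [], []
    for record in records:
        target = controlled if is_controlled(record["id"]) else uncontrolled
        target.append(record)
    return controlled, uncontrolled


records = [
    {"id": "TST-0001", "customer": "Test Customer A"},
    {"id": "C-88213", "customer": "Walk-in customer"},
    {"id": "TST-0002", "customer": "Test Customer B"},
]
controlled, uncontrolled = split_records(records)
print([r["id"] for r in controlled])    # ['TST-0001', 'TST-0002']
print([r["id"] for r in uncontrolled])  # ['C-88213']
```

With a filter like this in place, a controlled test can assert only against its own records, and a cleanup step can remove controlled data without touching what Users entered.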

Section 5 - Approach per Solution Evolution Phase

Complete this final section of this Approach during Phase Inception, when the juggling of what's in, what's out, and what's next occurs. Of course, a preliminary recommendation, or draft, of the Testing to include in any Phase is helpful.

Before updating Table SA6.2, it can be helpful to produce one or more diagrams depicting the Environments this Solution will use during the Phase, along with notes about what activities occur within each. Specifically, it can be helpful to identify the Test Types per Release Type.

Produce these diagrams in coordination with development of the Operations Approach, which defines the Environments the Solution plans to use during the next Phase. Afterwards, summarizing the diagram's information into the table becomes quite simple.

However, finalizing Table SA6.2 depends upon decisions made for other Approach artifacts, such as the Application Approach. Moreover, it also depends on practical considerations, such as resource constraints. As a result, finalizing such items is an output of each Phase's Inception.

Unlike other Approach artifacts, this one does not identify Features for delivery in the pending Phase(s). Rather, this Testing Approach summarizes the extent of testing to occur over the next Phase. Accordingly, preparation of this table must consider the full scope of test types, their sequencing, where their execution will occur and by whom.

Indeed, of all the Approach artifacts this may be the most difficult to complete. Building upon the information in the Testing Strategy, the additional information earlier in this Approach, and answers from Table SA6.1, it is time to define how Testing will occur during the next Solution Phase. Although, once defined for one Phase, this Approach becomes much easier to complete for subsequent Phases.

Envisioning Multiple Levels of Testing

To help organize thoughts on Testing, it is a good idea to think about which tests occur over various levels of Solution Delivery. Look to Test Levels & Progression for additional information. If appropriate, then copy relevant portions to earlier sections of this Testing Approach to help stakeholders better understand testing implications.

Test Levels

Grouping tests by levels helps focus attention on the specific types of testing which are appropriate at designated points in the Solution's development cycle. Basically, Test Levels combine the concepts of Test Targets and Test Sequencing to provide a framework for Test Progression.

The use of Test Levels - Sprint-level, Build-level, Candidate-level, and Production-level - helps create foundations of quality and trust upon which subsequent testing, with different test objectives, can build. Although, it is important to understand the objective of each Test Type, and to ensure that when it's time for such testing that the testing be thorough, transparent, and sufficient to establish confidence and trust in the functionality under test. Trying to achieve the objective of one Test Type by using another type, or in the same time and place as another, is a bad idea.

Misusing Test Types creates conflicts, such as trying to use both controlled and uncontrolled data in the same place at the same time. As a result, conflicts often lead to a loss of stakeholder confidence in all testing. Of course, Teams can mitigate such conflicts, but doing so tends to require more work and more cost than simply segmenting the test cycles appropriately. Test Levels & Progression define a different perspective of how test Targets and Sequencing build trust and confidence in the Solution.

To emphasize, as each change progresses from idea through to Production deployment, different tests validate different aspects of the change. In parallel with configuration and development actions, this Approach defines tests to occur over the Sprint, Release and Phase timeframes. In other words, tests which validate short-term change, medium-term results, and long-term operations. Significantly, a proper design of each test allows one Test Type to build upon another, leading to a more stable, and viable, Solution.

Step 2: Define Approach for Phase - Create / Update Table SA6.2

To begin, look to Section 2 in the Application Strategy for the current list of Solution Evolution Phases. For each Phase, create a Table SA6.2. Give each header a unique, one-letter identifier, such as Table SA6.2(a), Table SA6.2(b), Table SA6.2(c), and so on. Be sure to include the Solution Phase ID / Name in each header.

Secondly, look to the Testing Strategy for the list of Test Types which apply to this Solution. Add each Test Type which appears in Table SS6.1 to a row in Table SA6.2. Thirdly, identify the Target Release against which to execute each Test Type. Most types execute against a single target. However, some may execute against multiple targets.

Fourthly, identify the Target Environment in which to execute each Test Type. Ensure the Operations Approach confirms the Target Release - Build, Candidate, or Production - belongs in the Target Environment. Fifthly, identify the Test Sequence in which to execute each Test Type. The result should sort Target Release and Target Environments to generally reflect work and test progression.

Sixthly, again look to the Testing Strategy. If there is a recommended, or preferred Testing Scenario for a given Test Type + Target Environment, then identify the corresponding Design Pattern which the tests should apply. If there is no appropriate pattern, revisit the Strategy to identify a Tool and/or Service suitable to conducting the required testing.

Finally, identify the Team or Group who has primary ownership for developing and executing each Test Type for each Target Environment. Note, ownership does not limit participation. For instance, while representatives of the User Community should participate in System Testing and UAT, they should own neither. Rather, the owners of each Test Type are responsible for managing participation.
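Once the rows are drafted, a few of the checks implied by these steps can be automated. The sketch below is one possible approach: it flags duplicate Test Sequence values, Target Releases that do not belong in the Target Environment, and rows with no owner. The `PhaseTestRow` class and the `ENVIRONMENT_RELEASES` mapping are hypothetical. The real mapping must come from the Operations Approach.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PhaseTestRow:
    """One row of Table SA6.2 (Design Pattern omitted for brevity)."""
    test_type: str
    sequence: int
    target_release: str
    target_environment: str
    group_responsible: str


# Hypothetical extract of the Operations Approach: which Releases each
# Environment may contain. CDT-QA accepts Production because UAT runs there.
ENVIRONMENT_RELEASES = {
    "BLD-DEV": {"Build"},
    "BLD-TST": {"Build"},
    "CDT-TST": {"Candidate"},
    "CDT-QA": {"Candidate", "Production"},
}


def check_rows(rows: list[PhaseTestRow]) -> list[str]:
    """Return a list of human-readable problems; empty means no issues found."""
    problems = []
    seen_sequences = set()
    for row in rows:
        if row.sequence in seen_sequences:
            problems.append(f"{row.test_type}: duplicate Test Sequence {row.sequence}")
        seen_sequences.add(row.sequence)
        allowed = ENVIRONMENT_RELEASES.get(row.target_environment)
        if allowed is None:
            problems.append(f"{row.test_type}: unknown Environment {row.target_environment}")
        elif row.target_release not in allowed:
            problems.append(
                f"{row.test_type}: {row.target_release} Release does not belong "
                f"in {row.target_environment}"
            )
        if not row.group_responsible:
            problems.append(f"{row.test_type}: no Group Responsible")
    return problems


rows = [
    PhaseTestRow("Integration Test", 3, "Build", "BLD-TST", "Team"),
    PhaseTestRow("Integration Test", 4, "Candidate", "BLD-TST", "QA"),  # wrong Environment
]
for problem in check_rows(rows):
    print(problem)
# Integration Test: Candidate Release does not belong in BLD-TST
```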