Data Migration Approach

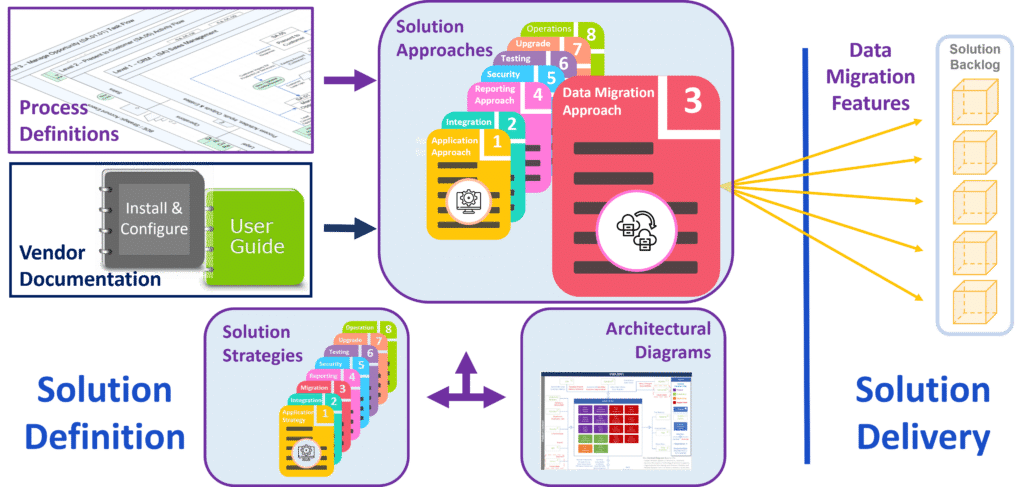

The third of eight (3 of 8) artifacts within the Solution Approach series is the Data Migration Approach. Basically, this approach connects the higher-level guidance of the Data Migration Strategy with corresponding Solution Delivery content. Additionally, it offers guidance for the design and development of individual Migrations.

In effect, each Migration into the Solution appears as its own Feature in the Solution Backlog. All Migration Features, along with their corresponding Stories, relate to the Solution's Data Migration Epic. This one Epic organizes all Data Migrations from legacy systems into the Solution.

To begin, use a copy of the upper portion of this page as the basis, or template, for this Data Migration Approach. Thereafter, following the 'How to Develop the Data Migration Approach' steps in the lower portion to complete this table-driven (i.e., fill in the blanks) artifact for a specific Solution.

Begin Template

Solution Approach 3 of 8 - Data Migrations

The series of artifacts outlining an Approach for this Solution continues with Data Migrations. Basically, each Approach bridges a gap between the guidelines and guardrails found in its corresponding, higher-level Strategy, and the subsequent execution of iterative Solution Delivery.

By working through this Data Migration Approach, an initiative defines data to bring forward from legacy systems into this Solution. Consequently, this helps build the Solution Backlog. Thereafter, subsequent Solution Delivery efforts implement the functionality identified herein. This Approach builds upon that which appears in the corresponding Application Approach. Refer to that document for additional information.

Introduction

The Data Migration Approach describes concepts for porting existing data from legacy systems into the Solution. Capturing this information prior to the start of each Phase makes subsequent design and delivery work as productive as possible.

To be sure, beginning with a holistic view of Data Migration facilitates better segmentation of Solution Delivery work. Additionally, it allows for improved management of scope and risk, as well as clearer communication of work efforts. Moreover, it also facilitates reuse and consistency among Data Migrations components. As a result, it reduces the time and effort required to design & deliver individual Data Migrations, while also improving their quality.

Objective

The objective of this artifact is to identify Data Migrations, and to explain aspects common to each as they apply to the Solution. Furthermore, it explains how to apply guidelines described in the Data Migration Strategy to best suit the Solution's needs. Moreover, it also provides specific orientation on the design, build and use of Migrations which everyone involved in those activities should follow. Accordingly, individual Migrations need only reference most content included herein, rather than copying and including them within each Feature. Whereas individual Features describe items specific and unique the given Migration, this Data Migration Approach covers topics that should be consistent across several (or most) Migrations, and hence redundant if left to the individual Features.

Audience

This audience for the Approach includes Solution Managers Architects, Business / Process Owners and Team(s) who will design and build the actual Data Migrations. In general, this Data Migration Approach enables stakeholders to make initial estimates of migration-related work. As a result, they are better positioned to manage expectations and organize delivery Teams. For instance, a Solution with many Migrations might benefit by having one or more Teams dedicated to Migration design and development. Conversely, a Solution with few Migrations may find it easier to have Teams which focus upon specific functional areas, to also deal with any related Migrations. Regardless of the work division, anyone planning, designing, and developing Migrations will find this Approach offers them a valuable head start.

Sections Overview

To compile this Data Migration Approach, begin with the Process Definition(s) which the Solution supports. Within each, identify Data Objects and determine whether there is, or is not, a desire to port their content from one or more legacy systems. Thereafter, complete the tables within this Approach for each object which requires Migration.

Revisit the tables in this Approach prior to beginning each new Solution Phase.

The Approach seeks to summarize Data Migration-related information by:

- Identifying affected Business Process(es) or Technology Enabler(s) and their corresponding Data Stores, identifying those which will - and which will not - require Data Migration.

- Compiling suitable information for budgeting, resource planning and other high-level estimation of Data Migrations needs.

- Describing functions common to all (or many) Data Migrations.

- Identifying individual Data Migrations the Solution may require; and

- Defining which Data Migration(s) to deliver during each Solution Phase.

Section 1 - Business Impacts

This Data Migration Approach begins by describing the Business Process(es) or Technology Enabler(s), as appropriate, along with related Data Objects within the Solution's Future- or End State.

Processes / Enablers

Table SA3.1 lists the Business Process(es) or Technology Enabler(s) which the Solution supports. This table reflects information captured by Level 3 Diagrams for each Process. To compile this information, conduct a quick review of the completed Task Flow Diagrams for each Process.

All Processes / Enablers listed in the 'Future State' section of the Application Strategy should appear in this table. As a result, Table SA3.1 will make it clear to everyone where to expect Data Migrations, and where not. In other words, this table helps define both what is, and what is not, in scope.

For each Process (or Enabler), the table identifies relevant Data Objects, along with the assessment as to whether data will, or will not, require Migration from one or more legacy system(s).

In addition to the general Data Objects, this table also captures relevant Data In/Out items which may appear in the Process Definition. It is not common to include Migration-related Data In/Out items. However, if they do appear, then this is where to compile them. Basically, Data Objects and Data In/Out items offer two different perspectives to help identify potential Migrations. In most cases, Enablers lack similar definitions from which to identify these items.

Table Values to Compile

The table which compiles Process or Enabler information lists the following:

- Defined Business Process / Technology Enabler: Name of the Process or Enabler to which the Data Object(s) relate.

- Related Data Objects - without Migration: A bulleted list of Data Objects which relate to the given Process or Enabler, for which to expect no Migration(s).

- Related Data Objects - with Migration: A bulleted list of Data Objects related to the given Process / Enabler, for which to expect Data Migration(s).

- Related Data In/Out Items: If used, a bulleted list of Data In/Out items from the corresponding Process Definition Diagrams and/or Matrices.

- Notes: After identifying a Migration, use this field to capture any early information. Afterwards, once the corresponding Feature appears in the Solution Backlog, copy or move such information to the Feature's content.

Table SA3.1: Business Processes (or Technology Enablers) and Data Objects

| Defined Business Process | Related Data Objects - without Migration | Related Data Objects - with Migration | Data In/Out Items | Notes |

|---|---|---|---|---|

Data Objects & Stores

Table SA3.2 identifies the Solution Data Store(s) affected by the Migration of data into the Solution.

Give each Process / Enabler for which at least one Migration is expected its own table. Accordingly, create a header for each table which includes 'for [Process Name]' or 'for [Enabler Name]'.

Relating Process / Enabler to Migration(s) helps provide context. For instance, the summary each table provides is helpful in determining which Migration(s) to include within scope of a given Phase. If the objective of a Phase is to enable a certain Process, then including related Migrations is likely warranted. Moreover, this relationship also helps identify stakeholders to participate in subsequent Feature Planning.

In time, each individual Migration becomes a Feature to add to the Solution Backlog. When adding each Feature to the Work Management System (WMS) it will be given a unique ID. In the interim, Migration Title appears in the table instead. However, Table(s) SA3.2 do not list individual Migrations. That is, the same Title may appear multiple times.

Basically, this is because a given Migration may affect multiple Data Objects / Stores. Likewise, a Data In/Out item may imply the same Integration as a Data Object, just from a different perspective. Conversely, multiple Migrations may affect a given Data Object / Store. Accordingly, look to the 'Data Migrations Summary' part later in this Approach for a list of Migrations.

Table Values to Compile

For each Process or Enabler, the table which compiles Data Object information lists the following:

- Process / Enabler Data Object or Data In/Out Item: From Table SA3.1 above, the label given to each Data Object or Data In/Out item.

- Solution Data Store(s): Lists application or technology Data Store(s) (file, table, entity, etc.) which correspond to the Data Object. Note that a given Data Object may affect multiple Data Stores, or vice versa.

- High-Level Migration Description: A brief description of the desired Data Migration.

- Related Process(es) / Enabler(s): This provides a cross-reference because the same Data Object may relate to multiple Processes / Enablers. This field lists each of the other Processes / Enablers from Table SA3.1 to which the Data Object relates.

- Migration Title: A simple, unique identifier, typically in the form of

[Legacy System] to [Solution] - [Data Object].

If a Data Object row and Data In/Out item row refer to the same Migration, then give them the same Migration Title.

Table SA3.2(a): Affected Data Objects for [Process Name] [or Enabler Name]

| Process Data Object or Data In/Out Item | Solution Data Store | High-Level Migration Description | Related Process(es) | Migration Title |

|---|---|---|---|---|

Section 2 - System-Level Mappings

Building upon the prior Data Objects & Stores, this section defines field-level alignments across multiple systems. Generally, these maps help assess Migration complexity, align legacy and Solution data values, and ensure alignment across multiple Migrations.

By focusing on the Data Stores, rather than individual Source / Target systems, each table may map values across multiple systems into the same location. In effect, this highlights the need for any data translation and/or standardization, as the inputs from multiple Source systems to the same Data Store are unlikely to be translation free.

Furthermore, this exercise also helps identify where values from one system may collide with values from another. For instance, new Solutions often consolidate multiple legacy systems. When this occurs, Target repositories may have to deal with conflicting data values from multiple legacy systems. Accordingly, definition of which values belong in the Solution is best done upfront, rather than during design of individual Migrations.

Multi-System Data Maps

To be clear, Table(s) SA3.3 do not provide the mappings for individual Migrations. Rather, these maps are system-to-system level, and may cover mappings which appear in multiple Migrations. To see actual field-level mappings of individual Migrations, refer to each Migration's corresponding Feature.

Table Values to Compile

Tables SA3.3 lists the field-level mappings from Legacy systems to a Solution Data Store. Each table lists the following:

- Source System: name of the legacy system from which to migrate data.

- Source Table: name of Table / Repository / API from which to send data.

- Source Field: name of the Field within the Source Table from which to send data.

- Source Data Type: the type of data represented by the Source Field.

- Target System: the name of the Solution to receive migrated data.

- Target Table: name of the Table / Repository / API to receive migrated data.

- Target Field: the name of the Field within the Target Table to place corresponding Source Field data.

- Target Data Type: the type of data represented by the Target Field.

- Translation Logic: A description of how to translate Field values between Source and Target. This is not the actual code / logic itself, merely a description of what is needed. Actual logic will be left for Stories enabling Data Migration Features.

Give each table the header '[Solution Data Store] System Mappings'. Create a header, and corresponding table, for each Data Store which appears in Table SA3.2 above.

Table SA3.3(a): [Data Store] System Mappings

| Source System | Source Table | Source Field | Source Data Type | Target System | Target Table | Target Field | Target Data Type | Translation Logic |

|---|---|---|---|---|---|---|---|---|

Section 3 - Data Migration Common Functions

This section of the Data Migration Approach describes functionality common across all Data Migrations. To begin, it defines standard Tasks to consider for each Migration Flow. Afterwards, it defines several types of Migration Business Rules each Migration must consider. Thereafter it provides a template for mapping data between Source (Legacy) and Target (Solution) systems. Finally, it covers several topics Teams should consider, and incorporate, into each Migration.

The topics in this section offer guidance to Teams, and Team members, who may not have much experience designing and developing Migrations. Moreover, they seek to help standardize all Migrations, regardless of which Team produces them. In some cases, they also offer opportunities for stakeholders to work through potential migration challenges up front. For instance, adding content to some tables in this section can be particularly helpful for any Solution which consolidates multiple legacy systems.

At this point, the Tasks and Rules are meant to guide subsequent Migration design development. Of course, each Migration is likely to build out additional Rules, specific to its needs. Look to the Migration Feature, and corresponding Stories, for such information. Additionally, if available, Teams should refer to the Development Standards - Data Migrations.

Data Migration Flow

Features represent each individual Migration. In other words, each [Source] to [Target] - [Data Object] has its own, specific Feature in the Solution backlog. The scope of each Feature consists of the six (6) major Tasks described below. Of course, the Tasks shown provide a template, or example, for Teams to follow when defining each Feature. Individual Solutions may alter these Tasks to suit their needs. However, they must still address these basic concepts.

In most cases, a Story represents each Task. That is, for each Migration Feature, there should be at least one Story for each Task in the Solution Backlog. Although, multiple Stories per Task is also OK. In some cases, a given Task may not apply. For instance, a Migration of Vendor data which requires no value translations. In this case, create a Story and explicitly state that the Task is not applicable to the Migration. As a result, this avoids confusion as to whether a Team overlooked some portion of the Migration. Teams should use the more detailed description of each Task, along with references to relevant Business Rules, for their definition of each Story.

|

Description

|

|

|---|---|

Task 1: Related Data Profiling |

To begin any Migration, conduct an analysis of the Data Object(s) within the legacy system. Generally, the objective is to identify relevant characteristics of the Source data. Afterwards, apply these characteristics to a Migration's design and corresponding tests. For instance, Data Profiling should provide results such as: record counts; field value frequencies; data types, and so on. These results should describe each field, or data element, to migrate into the Solution. |

Task 2: Mapping & Data Preparation |

Secondly, map legacy system data to Solution data. Of course, this must occur at field level in both Source & Target systems. Moreover, mapping often includes defining the various Business Rules.

Refer to the Data Migration Business Rules below. This is also where ETL designs (Tasks 3, 4, & 5) begin. Furthermore, this is the time to execute and Migration preparation, such as data cleansing or scrubbing. Refer to the Data Migration Mapping Template below. |

Task 3: Data Extract |

Thirdly, extract data from the Source system into a usable format, such as a Message, JSON, ASCII, CSV, DOC, PDF, etc. Accordingly, this is where to apply Data Selection Rules and Data Transfer Rules (see below). Additionally, include both Error and Audit reporting capabilities to provide historical reference for each execution.

Refer to both Error Handling and Audit Reporting below. |

|

Description

|

|

|---|---|

Task 4: Data Translation |

Fourthly, translate data from Source system form or format into forms and formats suitable to the Target system. Accordingly, this is where to apply Data Processing Rules and Data Translation Rules (see below). In some cases, Data Derivation Rules may apply here, if not better suited to Task 5. As with the prior Task, include separate Error and Audit reporting capabilities to provide historical reference of each execution. |

Task 5: Data Load |

Fifthly, upload the translated Source data into the Target tables, structures or repositories as appropriate. Accordingly, this is where to apply Data Derivation Rules and Data Load Rules (see below). As with the preceding Tasks, include separate Error and Audit reporting so an historical record remains following each execution.

If appropriate, Teams may combine Tasks 4 & 5 into a single component. In that case, follow the guidance above about creating a Story for applicable Tasks. |

Task 6: Validation |

Finally, validate that the transfer of Migration data is correct. Basically, this should encompass a combination of validations, which include:

|

Data Migration Business Rules

In this Data Migration Approach each Business Rule table layout includes just two columns:

- Rule ID: A unique ID in the form of XXXX_NNN. Each Rule Type has its own prefix for the ID to help organize and manage their use. Thereafter, NNN are just sequential numbers for each Rule Type.

- Rule Description: Defines what needs to occur to satisfy the rule.

In general, it is best to define rules before development and testing of a Migration. However, experience shows that Teams delivering a Migration often need to extend tables with additional rules - sometimes many - as design and testing progress. As a result, it is preferable to manage all rules of a given type, within a single Migration-related Task. To clarify, it is best to associate specific rule types, such as Transfer or Derivation, with a single task, such as Extract, Translate or Load. Mixing and matching rule types across tasks can introduce unnecessary complexity and challenges.

Data Selection Rules

This table compiles the Data Selection Rules which apply to the extract of data from the Source system.

|

Rule ID

|

Rule Description

|

|---|---|

| EXTR_001 | Remove duplicate Suppliers with the same Name and Address during extract from [Source System]. |

| EXTR_002 | Extract only Suppliers with a status of "ACTIVE" to the data file from <Source System>. |

| EXTR_nnn | Fields with no values are left blank. Do not zero or space fill. |

A Feature Page associated with each Data Migration is a good place to maintain these tables. Alternatively, rather than maintaining the list in the Collaboration Tool, Teams could maintain the tables within, or attached to, the Feature in the Work Management System.

Even though different rule types align to specific Migration Tasks, it is best to maintain all tables in one location. In other words, avoid placing rule tables into individual Stories. Rather, reference the location of the tables, such as the Feature Page or WMS Feature. in each Story. Having a single location to capture and review Business Rules will simplify rule management and help eliminate inconsistencies.

Each Rule Type below has its own unique ID to provide traceability. Accordingly, they are useful in both Error & Audit reports. That is, include Rule IDs when writing errors and audit records. Sample Rules appear below for each type. Replace these with common rules appropriate for the Solution, which can act as a template for when these tables are used in each Feature.

Data Processing Rules

This table compiles the Data Transfer Rules, which apply to the movement of extracted data. That is, to transmission of the data set generated by the Data Extract, from one system or location to another.

|

Rule ID

|

Rule Description

|

|---|---|

| XFER_001 | Give the operator an option to change in the Inbound Directory path specified by default in the Migration. |

| XFER_002 | Operator needs to ensure that the data file is moved to Inbound Directory path specified prior to running the Migration. |

| XFER_nnn |

Data Transfer Rules

This table compiles the Data Processing Rules, which govern the logic for analyzing Source data to produce related values suitable for loading into the Target system.

|

Rule ID

|

Rule Description

|

|---|---|

| PROC_001 | Perform standardization of Names and Addresses extracted from <Source System>. |

| PROC_002 | All Supplier Names shall be given in UPPERCASE only. In the event of not having uppercase for Supplier Name, Migration shall convert the data to UPPERCASE for <Target System> |

| PROC_nnn |

Data Translation Rules

This table compiles the Data Translation Rules, which apply to the translation of individual source data values into other values suitable for loading into the target system.

|

Rule ID

|

Rule Description

|

|---|---|

| XLAT_001 | Prepend an 'M' (for Migrated) to all Vendor numbers from Vendor Master File in <Source System> for use in <Target System> <Target Table> <Target Field> |

| XLAT_002 | |

| XLAT_nnn |

Data Derivation Rules

This table compiles the Data Derivation Rules, which use Source field values to derive new, suitable Target values. Derivation may involve segmenting, or merging, of Source fields to create new fields, or the use of multiple fields to derive a third.

|

Rule ID

|

Rule Description

|

|---|---|

| DERV_001 | Retrieve a sequence number from PO_VENDORS_S sequence that uniquely identifies each vendor and will be used to populate <Target Field> in <Target Table>. |

| DERV_002 | Supplier Site Name does not exist in current <Source System>. User needs to explain on deriving the Supplier Site Logic to convert data into <Target Table> <Target Field>. |

| DERV_nnn |

Data Load Rules

This table compiles the Data Load Rules, which apply to the loading of data into the Target system.

|

Rule ID

|

Rule Description

|

|---|---|

| LOAD_001 | Supplier Types should be configured (in Payables lookups) in the Solution prior to executing the Migration. |

| LOAD_002 | Pay Group defined in Payables should be same as in datafile. <Source System> does not contain Pay Groups. User needs to confirm for which Supplier Types, does specific Pay Group apply in the datafile. |

| LOAD_nnn |

This table is a catch-all for Other Rules that should apply to a Migration yet do not fit well into any of the Tables above.

|

Rule ID

|

Rule Description

|

|---|---|

| OTHR_001 | |

| OTHR_002 | |

| OTHR_nnn |

Data Migration Mapping Template

Use the following structure to map out Source to Target data maps. Seek approval of the field mapping from Business / Process Owners prior to development and testing of the Migration.

Notes on some columns:

- Reference ID (Ref #): is simply a unique row identifier.

- Not Null: is a Y/N indicator (actually, a leave blank or 'Y' indicator) as to whether the given field must contain a value.

- Default Value: is what to apply if a record does not otherwise contain a Source value for the given field.

- Business Rule ID: refers to the Migration Business Rules as described above. A cell may list multiple rules, as appropriate.

| Source (Extract) | Target (Load) | ||||||||

|---|---|---|---|---|---|---|---|---|---|

| Ref # | Table / File Name | Field / Column Name | Data Type | Table / File Name | Field / Column Name | Data Type | Not Null | Default Value | Business Rule ID |

| 1 | |||||||||

| 2 | |||||||||

| n | |||||||||

Error Handling

Most Data Migration Design Patterns have corresponding logging capabilities. Accordingly, embed a review of such outputs within the Validation task of each Migration (Task 6).

Beyond the delivered capabilities, anticipate additional error or exception conditions, and identify corresponding corrective actions. As a result, include functionality to produce Exception reports in Tasks 3, 4 & 5 - Extract, Transform & Load - of each Migration.

Including the ability to generate exceptions within the multiple Tasks provides improved Quality Assurance of the data being processed. Moreover, it speeds Migration development and testing. Replace samples in the table below with exceptions appropriate to each Migration.

|

Exception ID

|

Exception Description

|

Corrective Actions

|

|---|---|---|

| EXID_001 | Record Count Not Matched | Raise exception if datafile record count does not match with Staging Table record count after upload. Exit program after writing Error Message to Log File. Rerun the Migration after removing empty rows in datafile. |

| EXID_002 | <Data Value> already exists | Raise exception in PL/SQL program if the Supplier given in datafile already exists in PO_VENDORS table. Exit program after writing Error Message to Log File. Rerun the program after removing the duplicate vendor from datafile. |

| EXID_nnn | ||

Audit Reporting

Data Audits are individual queries or logs which produce counts, frequencies, cross-references, etc. of data as it is processed. In general, these provide insights in to the structure and content of the data. In effect, they often resemble queries used in Data Profiling (Task 1).

At a minimum, build-in functionality to automatically produce Data Audits in Tasks 3, 4 & 5 - Extract, Transform & Load - of each Data Migration. Including them within the multiple Tasks produces cross-referenceable materials to validate processing.

There is no ' one-size fits all' list of audit values. Although, some common audit queries include:

- Parameters or criteria used.

- Counts of the number of records processed.

- Counts or frequencies of individual or combinations of key values.

- Counts or frequencies of types, categories, or other aggregations within the data structure.

- Identification of inconsistencies such as blanks or Nulls where none should exist.

- Cross-references of Field A vs Field B or other types of relationships.

Do not leave the audits until the Load. Indeed, it is best to look for them early (Profiling or Extract) and to have multiple passes at the same criteria.

Scheduling

Unlike Integrations, most Data Migrations are unlikely to use automated scheduling. Rather, most will rely upon manual user execution. However, the timing of Data Migrations is often more critical.

In this case, Scheduling refers to identification of predecessor and successor tasks. In fact, it often involves defining specific tasks which must be completed prior to Migration execution. For instance, the closing of an accounting period, or the processing of payroll for a given cycle. Similarly, it can involve defining specific tasks to complete after each Migration execution. For example, the posting of journal entries or the execution of some task.

File Management & Archiving

A key aspect of Data Migration is the ability to compile and maintain records of the data transferred from each legacy system into the Solution. If maintaining records of data sets ported from one system to another is desired, then define how to capture and manage this historical information. Of course, this capture and management may also apply to Error and Audit information, as well as to the operational and/or transactional data being migrated.

Testing

Use applicable Unit, Function and Integration Tests to verify Source-to-Target mapping and ETL tasks. In general, such tests correspond to the Feature & Stories used to implement each Migration. Successful completion of these tests occurs during Build Testing.

In addition to these tests, build into each Migration some level of post-execution Validation (Task 6). In time, these are for Business representatives to review and sign-off.

Afterwards, successfully Verified and Validated Migrations become inputs for Certification and Rollout Testing associated with Release in which delivery of a given Migration occurs. Refer to the Testing Approach for additional information.

Section 4 - Data Migrations Summary

Compiling information from the tables above, this section summarizes all Data Migrations from legacy systems into the Solution. Moreover, it offers an opportunity to arrange multiple Migrations by the Solution Evolution Phase during which to expect delivery.

Use Table SA3.4 to help estimate resources - staffing, funding, etc. - as well as timing applicable to Migrations. However, for actual Phase-by-Phase timing, refer to the section 'Approach per Solution Evolution Phase' later on in this artifact. Similarly, for additional information on any source systems, refer to the 'Legacy Systems' section within the Application Strategy.

Backlog Migration Features

If not already done, then now is the time to add Features to the Solution Backlog. To be clear, each proposed Migration should have its own, corresponding Feature. When adding each Feature to the Work Management System (WMS) it will be given a unique ID. This ID is also known as the WMS Feature Key.

Ensure the Feature Name, and/or Feature Summary is the same as the Data Migration Title which appears in the tables of this Data Migration Approach. Additionally, add the High-level Migration Description from Table SA3.2 to the Feature Description. If applicable, then also move Notes from Table SA3.1. Finally, associate each Migration Feature with the Solution's Standard Epic for Data Migration. At this point, each Feature has sufficient information to remain in the backlog.

In time, Feature Planning make each Feature "Ready" for subsequent implementation. Alternatively, for any proposed Migration(s) never approved for delivery, the backlog Feature becomes the historical record, describing the relevant justification and decision maker(s).

Table Values to Compile

Table SA3.4 summarizes all potential Data Migrations planned for the Solution. For each Migration, list the following:

- Process / Enabler: The Business Process or Technology Enabler which includes, or is directly related to, the given Data Migration.

- Data Migration Title: A name for the Migration, typically following the format of

[Legacy System ID / Name] to [Solution ID / Name] - [Data Object]. - Proposed Solution Phase: The suggested Solution Phase during which to expect initial delivery of the given Migration.

- WMS Feature Key: The ID or key from the Work Management System which identifies the specific Feature to enable the given Migration.

Table SA3.4: [Solution ID / Name] - Proposed Migrations

| Process Name | Data Migration Title | Proposed Solution Phase | WMS Feature Key |

|---|---|---|---|

Section 5 - Approach per Solution Evolution Phase

The final section of this Data Migration Approach defines which Data Migrations to expect during each Solution Phase. Previously, Table SA3.4 - Data Migrations Summary offered opportunities to review and organize the entire list of proposed Migrations for resource planning purposes.

Migrations by Solution Phase

At this point, tables in this section capture decisions that result from those earlier estimates and related resource planning. As a result, they also tie to the Solution Roadmap. Moreover, rows in each table align individual Migrations with guidance from the overall Data Migration Strategy.

Table Values to Compile

The header for each Table is 'Phase [Phase ID]: [Phase Name] - Data Migrations'. For each Phase, the values to compile in each table include:

- Phase ID / Name: From the Application Strategy, the Solution Phase during which to expect the Migration's initial delivery.

- Data Migration Title: The Feature Name (or Feature Summary) of the specific Migration. Ideally, following the form [Legacy System] to [Solution] - [Data Object].

- Data Migration Description: A copy of, or comparable to, the Migration's initial Feature Description.

- Source System: Name of the legacy system from which to migrate data.

- Target System: Name of the system to migrate data into.

- Data Object: From Table 3.2 in Data Objects & Stores above.

- Design Pattern: From the Data Migration Strategy, the pattern which the Migration is to apply.

- WMS Feature Key: The ID, or key, in the Work Management System which uniquely identifies the Migration in the Solution Backlog.

Table SA3.5(a): [Solution Phase ID / Name] - Data Migrations

| Phase ID / Name | Migration Title | Migration Description | Source System | Target System | Data Object | Design Pattern | WMS Feature Key |

|---|---|---|---|---|---|---|---|

End Template /

Optionally, include the following Checks & Balances.

Checks & Balances - Alignment to Other ITM Artifacts

This section is available in many Solution Strategy & Architecture artifacts, to help those who create, and use, this information see the "bigger picture".

Checks & Balances highlight where similar information appears in multiple locations. The reason to display info more than once is to help ensure alignment and consistency across all stakeholders. Each appearance offers a different perspective for a different audience. The more widely available such information becomes, the more likely it is to drive common understanding. As a result, this helps initiatives to progress more quickly and with fewer impediments.

Section 1 - Business Impacts

In short, Section 1 offers a high-level overview of the Processes or Enablers, depending upon Solution Type, and Data Objects which Migrations may affect. Accordingly, it extends and highlights information relevant to those orchestrating implementation.

Table SA3.1

Business Processes (or Technology Enablers) appearing in Table SA3.1 must also exist in the Application Strategy Table SS1.2: Future State Processes & Supporting Components. Unless the Process (or Enabler) appears in Table SS1.2, it is not yet approved for this Solution. Hence, no work should occur related to it. Furthermore, each Process should also appear in the corresponding Solution Context Diagram. For additional information on why the Migration exists, or where in the sequence of events it occurs, look to the corresponding Process Definition.

Similarly, each Data Object appearing in Table SA3.1 must also exist in the Application Approach Table SA1.2: Major Solution Data Objects. Specifically, Data Objects which appear in the With Migration column may appear either the In-Scope or Future-Scope columns of that table. Otherwise, Data Objects which appear in the Without Migration column may appear in the In-Scope, Future-Scope, or Out-of-Scope columns of Table SA1.2.

Similarly, each Data In/Out item appearing in Table SA2.1 should exist in the Task Flow Diagrams, or any parent diagram of the corresponding Process Definition. Although, it is unusual to identify Data In/Out icons for Migrations in Process Diagrams. However, if it was done, look to the diagrams or corresponding Process Detailing Matrix.

Table(s) SA3.2

To begin, any Data Object or Data In/Out item appearing in Table SA3.2 must also appear in Table SA3.1, described above. Moreover, each must also conform to the constraints described in the earlier reference.

A Solution Data Store listed in the table should correspond to descriptions which appear in vendor documentation or other, similar materials. In most cases, Data Stores should also appear in the Process Diagrams and Matrices.

All Related Process listed should also appear in Table SA3.1. Ideally, they are Defined Processes and hence have Process Definition artifacts associated with them. However, some Processes may also be undefined. If so, they will lack corresponding diagram and matrix details.

The Migration Title appears in several tables within this artifact. In each instance, the same title should appear to represent the same Migration. Additionally, each title should tie to the Name and/or Summary of the Solution Backlog Feature to which a proposed Migration relates. Moreover, that Feature should align to the Standard Epic for Migrations.

Section 2 - System-Level Mappings

This section seeks to provide insights into the potential complexity of Migrations.

Table(s) SA3.3

Any system appearing in Table SA3.3 as a Source System or Target System must align with related systems defined in Table SA1.9: Data to Migrate of the Application Approach.

Also, look for multiple occurrences of the same Table + Field values appearing in either Source or Target columns, or both. The more times the same values appear, the more complexity should be assumed for any Migration involving those values. Accordingly, ensure the estimate for such Features reflects the likely additional complexity.

Section 3 - Data Migration Common Functions

This section contains no tables. However, each of the Migration Flow Tasks 1 through 6 should have a corresponding Story in the Solution Backlog. Moreover, each of the six (6) Stories should relate to the same parent Feature, representing the individual Migration.

Section 4 - Data Migrations Summary

This section summarizes all potential Migrations. However, the appearance of a Migration in this section does not guarantee it will ever be created.

Table SA3.4

Each Process Name appearing in Table SA3.4 must correspond to one listed in Table SA3.1 and Table SA3.2 above. Likewise, the Migration Title should also correspond to values appearing in both of those tables.

The Proposed Solution Phase must correspond to one previously described in Section 2 of the Application Strategy, as well as Table(s) SS1.4: Solution Phase - SID-PHnn: [Phase Name]. Similarly, the WMS Feature Key must correspond to the Feature ID as it appears in the Solution Backlog.

Section 5 - Approach per Solution Evolution Phase

To conclude, this section prioritizes individual Migrations into the Solution Phase during which to expect delivery of the Migration.

Table(s) SA3.5

Firstly, the Phase ID / Name of Table SA3.5 must correspond to one described in Section 2 of the Application Strategy. Secondly, the Migration Title must correspond to the same value which appears in Table SA3.2 and Table SA3.4.

Thirdly, each Source System value must be a legacy system which appears in Table SA1.9: Data to Migrate of the Application Approach . Each Target System value must be the Solution ID from Table SS1.3: Solution Identification in the corresponding Application Strategy. Moreover, these values should correspond to Source and Target System values appearing in Table(s) SA3.3 above.

Fourthly, the Data Object value must correspond to one which appears in Table SA3.2 above. Similarly, the Design Pattern must correspond to one appearing in Table SS3.1: Data Migration Scenarios & Design Patterns of the Data Migration Strategy. Finally, the WMS Feature Key must correspond to a value which appears in Table SA3.4.

How to Develop a Data Migration Approach

In most cases, a Solution Architect or Application / Technology Architect facilitates the creation or update of this Data Migration Approach. However, they should do so in collaboration with affected Epic Owners as well as relevant Business / Process Owners. Additionally, they will require input from Subject Matter Experts familiar with each legacy system.

To create the initial version, use the preceding template content on this page. Otherwise, to update this Approach for a subsequent Phase, use the most recent version from a preceding Phase. Thereafter, follow the steps below to create, or update a Solution's Data Migration Approach.

Regardless of which Phase the artifact is for, completion of the current version occurs during Phase Inception. At that time, the long-term scope of the Phase, including which Migrations to deliver, is set. As a result, the finalized Approach per Solution Evolution Phase section defines the scope of Migrations for the next Phase.

Section 1 - Business Impacts

To start this Data Migration Approach, describe how potential Migrations relate to the individual Process(es) or Enabler(s) the Solution supports. Accordingly, complete Steps 1 and 2 to describe the Business Impacts of Migrations.

Step 1: Determine Affected Data Objects - Create / Update Table SA3.1

To begin adding Solution-specific content to the template, list each Process or Enabler as they appear in Table(s) SS1.4 of the Application Strategy. At a minimum, list the Future State Process(es) which apply to the pending Phase. Alternatively, list all Processes, regardless of Phase. The latter helps identify multiple Processes which may affect one another.

For each Process added to the table, review the corresponding Task Flows (Level 3 Diagrams). In short, parse through the diagrams, or corresponding Process Detailing Matrix, to identify referenced Data Objects. Of course, Object lists can begin prior to the Level 3 flows being complete. However, lists cannot be final until sign-off of each Process' Level 3 Diagrams occurs.

For each Data Object, determine if there is a desire to migrate such data into the Solution from any legacy system(s). Furthermore, determine whether an inbound transfer of such information relates to the given Process. At this point, a brief discussion with stakeholders is typically sufficient to identify desired, potential Migrations.

List all Data Objects for the Process in either the 'With' or 'Without' Migration column of the table. Basically, a Data Object should appear in either the 'With' or 'Without' column for a given Process. Although, the same object can appear in both columns though for different Processes. To clarify, put Data Objects in the 'With' column only when the data to move relates directly to execution of the corresponding Process.

Additionally, add the name of each Data In/Out icon appearing in the diagrams and which relate to a Migration. This offers another perspective to identify potential Migration work.

Optionally, add any information relevant to understanding, assessing, or prioritizing individual Migrations using the space for Notes.

Step 2: Identify Relevant Migrations - Create / Update Table SA3.2

Previously, Table SA3.1 identified all Data Objects associated with each Business Process. Furthermore, it identified which related Data Objects may require Migration from a legacy system. At this point, Table(s) SA3.2 highlight Migrations as they relate to an individual Process. This is to help align potential Migration work with other Process-related efforts as defined in the Application Approach.

To begin, look to each row in Table SA3.1 which identifies one or more Data Objects in the 'With Migration' column. Additionally, look to each row which lists one or more Data In/Out items. For each corresponding Process, create a Table SA3.2, giving each header a unique, one-letter identifier, such as Table SA3.2(a), Table SA3.2(b), Table, SA3.2(c), etc. Be sure each header includes the Process Name.

Secondly, for each Process, add a row to the table for each 'With Migration' Data Object. Likewise, add a row for each Data In/Out item. List these in the first column. Thereafter, identify the file / table name(s) within the Solution which store values for the given Data Object or Data In/Out item. For some Solutions, this may involve more than one Data Store. However, in most cases, there should be a relatively obvious 'main' file or table.

Thirdly, provide a brief description of the proposed Migration. In short, this is a quick what, when, and why to support the case for spending time and money on the Migration. Descriptions should involve no more than one legacy system. That is, if values for a Data Object are inbound from more than one system, then each system gets its own High-level Migration Description.

Identify Duplicates

Fourthly, look back to the 'Without Migration' column in Table SA3.1. If the Data Object referenced by the current row of Table SA3.2 appears in that column for any other Process, list the Process Name(s) as Related Process(es).

Finally, identify individual Migrations by giving each a unique title. A simple, yet effective, form is to identify the Source legacy system, the Target Solution, and the Data Object to exchange. For example, 'Oracle EBS to D365 - Customers'.

If two or more rows listing a Data Object and/or a Data In/Out item refer to same thing, then give each row the same Migration Title. This conveys that these are two views of the same Migration.

Quick Validations

At this point, conduct a few validations. Firstly, verify that the same Data Object does not appear in more than one SA3.2 table. If one does, then take a closer look at it. Perhaps additional detail is required to differentiate two seemingly similar Migrations. For instance, perhaps what was described as "Employee Data" is better described as "Employee Vacation Data" and "Employee Timesheets".

In some cases, Data Object values required for Process A might include Fields 1 & 2. Alternatively, Process B requires fields 2, 3 & 4. As above, the proper approach is to segment the different needs into separate, distinct Migrations. Later, discussions and decisions may determine whether to tackle multiple, related Migrations at one time, or to build one first, and extend it later.

For the second validation, verify that each High-level Migration Description references only a single related system. If any description references multiple legacy systems, then create a copy of the description for each system.

Afterwards, verify that each row in Table SA3.2 includes just one High-level Description. If a row contains multiple descriptions, then create a row for each. Repeat the Data Object and Data Store values for each. Additionally, update any Migration Title value(s) as appropriate.

If restating initial Data Objects occurs, either by segmenting into more detail, or aggregating into less, then make similar changes to Table SA3.1. Moreover, ensure similar changes occur to the corresponding Process Definition flow diagrams and matrices. Maintaining consistent information across artifacts is vital to ensure clear communications and manage expectations.

Validations are done when the same Data Object does not appear in more than one SA3.2 table, and each Data Object does not have more than a single High-level Migration Description.

Section 2 - System-Level Mappings

Previously, Tables SA3.1 and SA3.2 viewed Migrations from a Business-perspective. At this point, it's time to look from a Technology-perspective.

Completing this section often involves working with Application or Technical Architects, Business / Process Owners, or other Subject Matter Experts who are knowledgeable about the legacy system.

Step 3: Assess System-to-System Mappings - Create / Update Table(s) SA3.3

To begin, identify each Solution Data Store appearing in Table(s) SA3.2. For each, create a Table SA3.3, giving each header a unique, one-letter identifier, such as Table SA3.3(a), Table SA3.3(b), Table, SA3.3(c), and so on. Be sure to include the Data Store Name in each header.

Secondly, for each Solution Data Store, identify the relevant fields into which, or from which, to expect Migration values. To emphasize, the fields in question are meant to represent core, or common values. For instance, a Name, an ID, or Address values. Indeed, there is no expectation to map every possible field at this point. However, increasing the number of fields identified decreases the likelihood of encountering problems and delays later. Add Target Table, Field, and Data Type info to the table.

After identifying relevant Data Store fields, look to the High-level Migration Description(s), or Migration Title(s) from Table SA3.2 to identify each legacy system from which the Data Store may receive values. Accordingly, identify people knowledgeable about each legacy system. Thereafter, work with these individuals to determine equivalent related system tables and field(s). Add this information and align these Source values with the Solution fields previously entered.

There may not always be a 1-to-1 mapping of system-level fields. In some cases, multiple legacy systems may map to the same Solution tables. Alternatively, a single field in one system may equate to multiple fields in another. Similarly, the Data Types may not be the same from one system to another. Use the Translation Logic field to describe things to consider when designing individual Migrations.

Quick Validations

Of course, each table row describes a field-level map between a legacy system and the Solution. If there are multiple legacy systems which may port the same Data Store values, then expect the same fields to appear over multiple rows. Similarly, it is often the case that one related system can map a greater (or fewer) number of fields than another related system. At this time, simply map what is possible, and highlight anything needing additional consideration, such as multiple related systems exchanging values for the same Solution field.

There are two Table(s) SA3.3 validations to consider. Firstly, each Source System must be a legacy system defined in Table(s) SA1.8 of the Application Approach. Secondly, each row should include Solution values in the Target columns. In other words, if any row does not include Solution values as the Target, then something is wrong. Similarly, if both Source and Target values are Solution-related, then something may be amiss. In either case, re-consider the Solution's boundaries as currently understood.

Section 3 - Data Migration Common Functions

For the most part, this section merely offers guidance for each Migration to follow. There is no need to complete any table in this section.

Step 4: Define Common Functions

However, while there is no need to update any table, there are opportunities to do so. Modifying any table in the Flow, Business Rules, and Error Handling sub-sections prior to the start of a Solution Phase can offer a head start to the delivery of any Migrations during subsequent Phases.

For instance, content provided in these areas helps guide standardization and consistency across multiple Migrations. As a result, this often leads to faster delivery times, improved re-use of previously delivered items, and higher quality Migrations.

In another example, as Teams make design and delivery decisions, capture relevant outcomes for the benefit of subsequent Migrations. To explain, suppose during implementation of one Migration a decision determines that Supplier Name should be uppercase. Thereafter, update an appropriate Business Rule in this Data Migration Approach to reflect that decision.

Thereafter, that rule now applies to any subsequent Migration which involves the same field. Indeed, the capture of any 'lessons learned' which can help speed up delivery, increase consistency, and minimize problems is always a step in the right direction.

Section 4 - Data Migrations Summary

At this point, it is time to use the information compiled in the preceding tables to roughly estimate Migration development timing and sequencing.

Step 5: Summarize Proposed Migrations - Create / Update Table SA3.4

To begin Table SA3.4, look back to Table SA3.1 and Table SA3.2 for the list of Migration Titles. Add each, unique value to this table's Migration Title column.

Afterwards, for each row in Table SA3.4 with a Migration Title, look back to Table SA3.1. Find the Migration Title in that table and identify the corresponding Process (or Enabler) for which a Data Object appears in the 'with Migration' column of Table SA3.1. Add the Defined Business Process value to Table SA3.4.

Thirdly, identify a Solution Phase for which to recommend initial delivery of each Migration. Look to Application Approach for the Process(es) defined as being in scope for each Phase. If a Migration has already been scheduled during a prior Phase, then state the actual Phase instead of a proposed one.

Migration Timing

It is best to propose a Migration's delivery in any Phase prior to the one that involves implementation of a Process which depends upon the data. As a result, data from the legacy system(s) is available for testing during Process implementation. Phase-level separation often allows for plenty of time between needs for upstream data. As a result, this alleviates much pressure and overhead in dealing with trying to deliver such Features in a single timeframe.

Of course, it is possible to schedule Migrations in the same Phase as corresponding Process implementation. In doing so, Roadmap Planning must consider Feature sequencing. Basically, the sequence should be Migration before Process. For instance, within a Phase, it is best to schedule Migrations for an initial Release. Afterwards, schedule Process implementation for middle to latter Releases. In this case, expect to produce significantly more Test Data to allow for each Feature to progress without dependency on related Features.

Finally, once the Migration has a Feature in the Solution Backlog, identify that Feature in the WMS Feature Key field. As a result, anyone viewing this Data Migration Approach can seek additional information.

Section 5 - Approach per Solution Evolution Phase

Complete this final section of the Approach during Phase Inception, when the juggling of what's in, what's out, and what's next occurs. Of course, a preliminary draft, or recommendation, of the Migration(s) to include in any Phase is helpful.

However, finalizing a list of Migrations for a Phase depends upon decisions made for other Approach artifacts, such as the Application Approach. Moreover, it also depends on practical considerations, such as resource constraints. As a result, finalizing all such items is an output of each Phase's Inception.

Step 6: Define Approach for Phase - Create / Update Table(s) SA3.5

To begin, look to Section 2 in the Application Strategy for the current list of Solution Evolution Phases. For each Phase, create a Table SA3.5. Give each header a unique, one-letter identifier, such as Table SA3.5(a), Table SA3.5(b), Table, SA3.5(c), and so on. Be sure to include the Solution Phase ID / Name in each header.

Afterwards, add the given Phase ID / Name to the first column of each Table SA3.5. This is helpful when extracting specific rows for use outside of this Data Migration Approach.

Secondly, during discussions and negotiations which occur during Phase Inception, define the list of Migrations to deliver during the pending Phase. For each Migration in-scope for the Phase, add the Migration Title to an appropriate row in Table SA3.5.

Thirdly, look back to the prior tables in this artifact, and copy the relevant information to the Migration Description, Source System, Target System and Data Object columns. While this may seem redundant if using the recommended Title format, it allows for sorting each table for different audiences.

Fourthly, look to the Data Migration Strategy. If there is a recommended, or preferred Migration Scenario for a given Migration, then identify the corresponding Design Pattern which the Migration should apply.

Finally, if any Migration does not yet have an existing Feature to represent it in the Solution Backlog, then add one now. After adding the Feature(s), record their Feature Key in this table. This will direct anyone seeking additional information to the correct backlog objects.