The last of four (4) Test Development & Execution subjects looks at test actions as a Solution evolves over time.

Test Levels and Related Test Types

Grouping tests by levels helps focus attention on specific types of testing which are appropriate at designated points in the Solution's development cycle. Test Levels combine the concepts of Test Targets and Test Sequencing to provide a framework for test progression.

The concept of levels is to create foundations of quality and trust upon which later testing, with different test objectives, can build. It is important to understand the objective of each Test Type and to ensure that, when the time for that testing arrives, it is thorough, transparent and sufficient to establish confidence and trust in the functionality under test. Trying to achieve the objective of one Test Type by using another type, or in the same time and place as another, should be avoided. Doing so causes conflicts with other testing (e.g., trying to have both controlled and uncontrolled data in the same place at the same time) and leads to a loss of confidence in all testing. Such conflicts can be mitigated, but that tends to require more work and more cost than simply segmenting the test cycles appropriately.

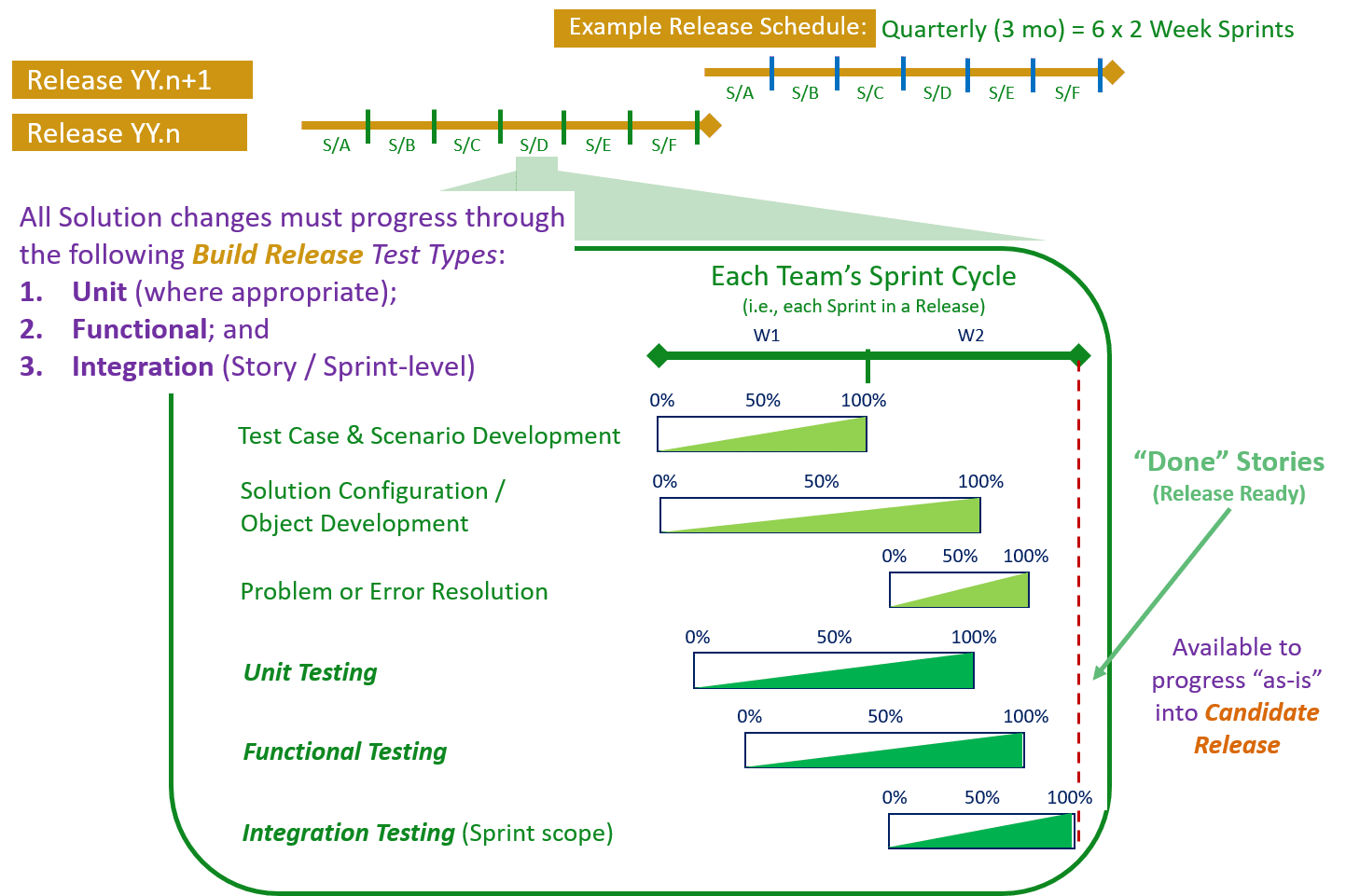

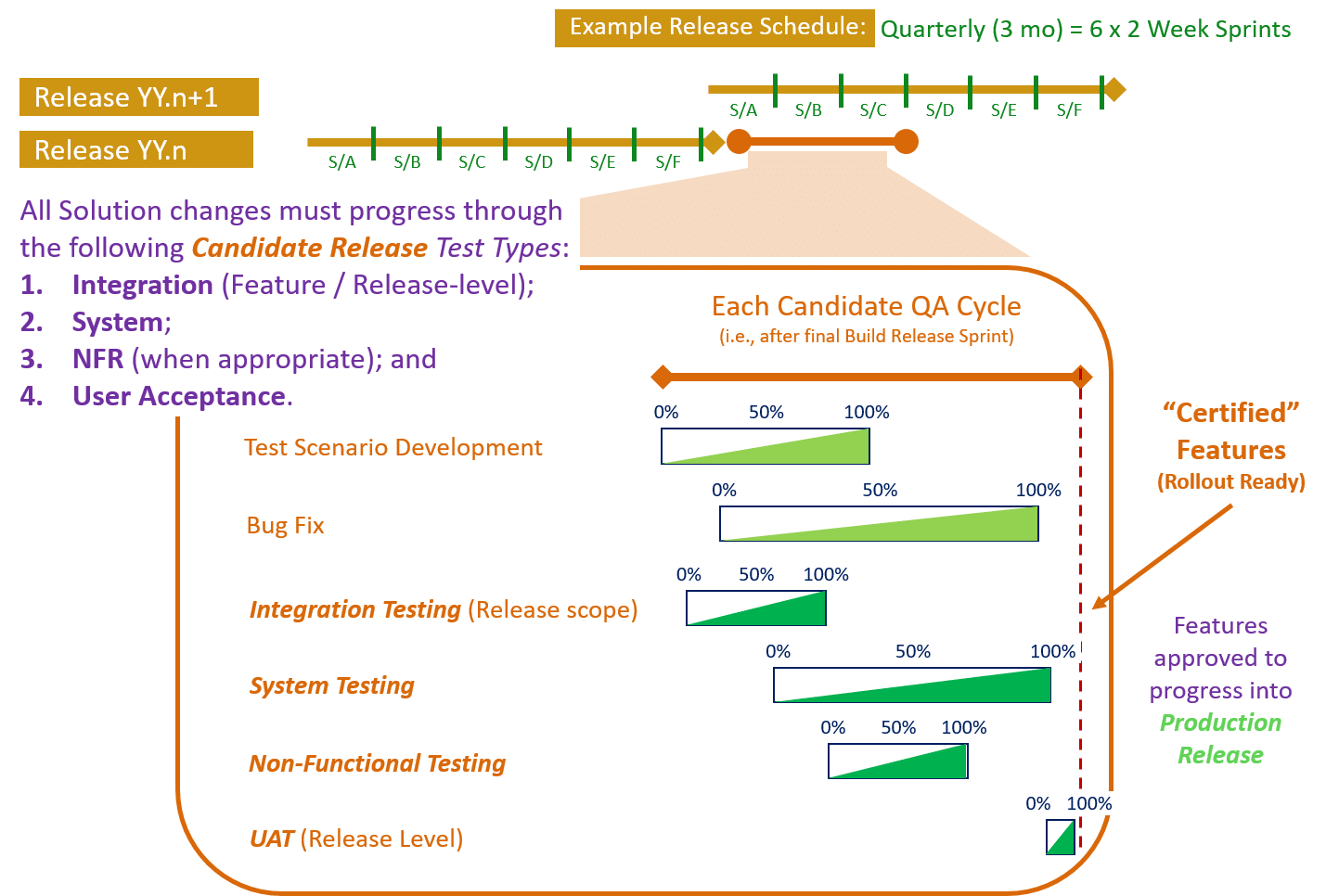

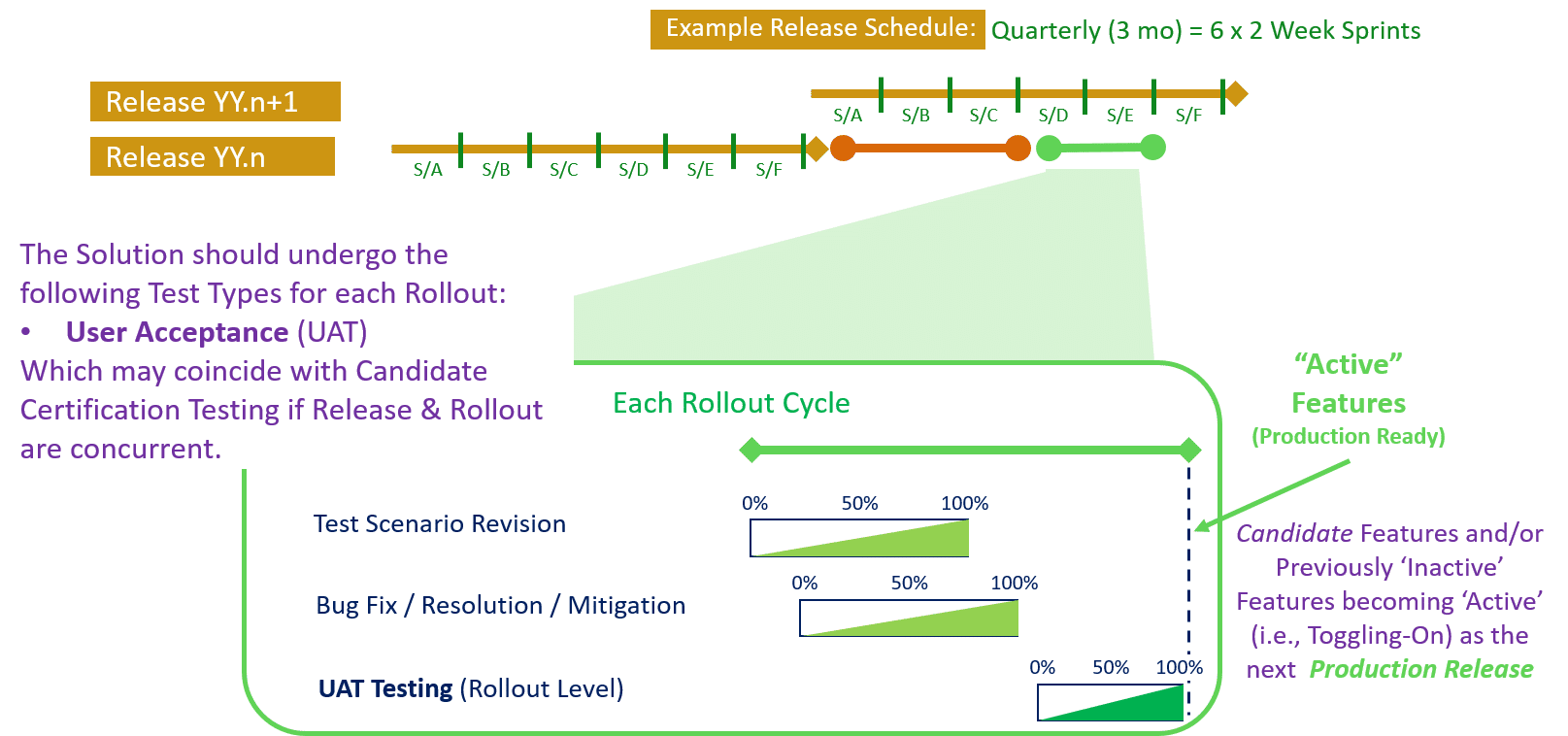

The examples below depict a Solution with a Quarterly Release schedule (i.e., having six (6) Sprints per Release). The concepts described can be adjusted to suit Monthly or Bi-Monthly Release schedules.

Sprint-Level Testing

The first level of testing occurs at the Sprint-Level. This is where initial Solution changes are made, along with the vast majority of the Test Components which will validate those changes.

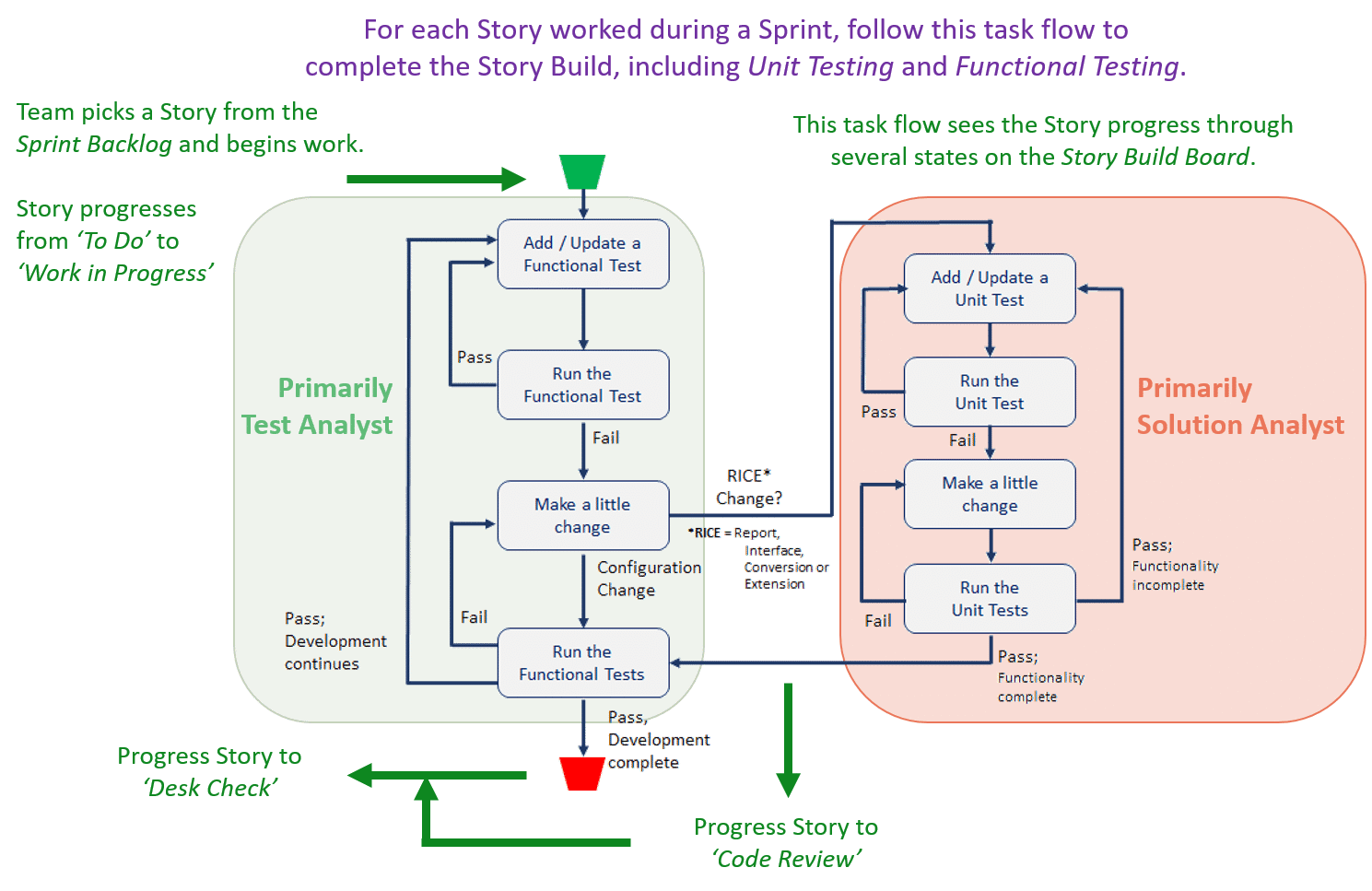

Unit Tests and Functional Tests should be produced in parallel with (ideally, prior to) Solution configuration and RICE object development. A basic sequence of steps which Team members should follow is depicted in the Figure at right.

Unit Tests should be created and executed in conjunction with the development of any Report, Interface, Conversion or Extension (RICE) object. Development should not be considered complete until all planned functionality has been created and corresponding Unit Tests exist and pass.

As soon as Functional Tests and any portion of their corresponding Solution configuration and/or RICE object are available, Functional Test execution should begin. Teams should not wait for completion of RICE object development to begin planning and executing tests. They should coordinate development and testing activities such that incremental testing begins early and is repeated often. Ideally, incremental functionality and incremental testing should be made available multiple times per day.

Once a Story passes Functional Testing, it is promoted from a development environment to a test environment for further Integration Testing.

Stories which pass both Functional & Integration Testing are demonstrated to the Solution Owner, who will either 'Accept' the Story, at which time it is considered "Done", or 'Reject' the delivered functionality (i.e., more changes are needed).

- Sprint-Level Testing occurs primarily against a Build Release, as Stories from the Sprint Backlog are worked.

- Sprint-Level Testing may also occur for a Candidate Release, as Bugs from the Sprint Backlog are worked.
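The Story flow described above can be sketched as a small state machine. This is only an illustration: the state names and the `advance` function are hypothetical, and the rule that a failed test or a 'Reject' sends the Story back to development is an assumption drawn from "more changes are needed".

```python
from enum import Enum


class StoryState(Enum):
    """Hypothetical states for a Story moving through Sprint-Level Testing."""
    IN_DEVELOPMENT = 1      # being built and Unit/Functional tested (dev environment)
    FUNCTIONAL_PASSED = 2   # promoted to a test environment for Integration Testing
    INTEGRATION_PASSED = 3  # ready to be demonstrated to the Solution Owner
    DONE = 4                # 'Accepted' by the Solution Owner


def advance(state: StoryState, passed: bool) -> StoryState:
    """Move a Story one step forward, or back to development on failure.

    `passed` means the current gate succeeded: Functional Testing,
    Integration Testing, or the Solution Owner's acceptance decision.
    """
    if state is StoryState.DONE:
        return state  # nothing further happens at the Sprint-Level
    if not passed:
        # Assumption: any failure or 'Reject' returns the Story to development.
        return StoryState.IN_DEVELOPMENT
    return StoryState(state.value + 1)
```

For example, `advance(StoryState.INTEGRATION_PASSED, False)` models a 'Reject' at the demonstration and returns `StoryState.IN_DEVELOPMENT`.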

Sprint-Level Test Types do not require individual Test Plans. Rather, these fall within the scope of Build-Level plans (see the next section). That said, if the Team feels that creating a plan for one or more Sprint-Level Test Types would be useful, they should do so.

Release-Level Testing

The second level of testing, Release-Level, consists primarily of Sprint-Level testing repeated across the number of Sprints set by the Solution's Release Cadence.

The following types of testing are to be completed within each Sprint.

- Unit Testing

- Functional Testing

- Integration Testing (Sprint scope)

Both Functional and Integration Tests should extend and/or expand (as appropriate) over each Sprint as new functionality is added during the Build Release.

While functionality is delivered and tested Story-by-Story, by the end of the Build Release's Last Sprint the tests must cover the entirety of each Feature being delivered. Recall that a Feature is considered "Done" when all Stories related to it are "Done". So by the time the final Story is produced, corresponding tests should be available to validate the whole Feature, as well as its individual Stories.
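The "Done" rule for Features can be expressed in a few lines. The sketch below is illustrative only; the `Story` and `Feature` classes are hypothetical, and treating a Feature with no Stories as not "Done" is an assumption rather than something stated here.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Story:
    name: str
    done: bool = False  # True once all related tests pass and the Story is 'Accepted'


@dataclass
class Feature:
    name: str
    stories: List[Story] = field(default_factory=list)

    def is_done(self) -> bool:
        """A Feature is "Done" only when every one of its Stories is "Done"."""
        # Assumption: a Feature without any Stories is not considered "Done".
        return bool(self.stories) and all(story.done for story in self.stories)
```

A Feature with one unfinished Story reports `is_done() == False`, however many of its other Stories have been accepted.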

The diagram above shows a rough alignment of development and test activities during each Sprint. 0% at the left represents work yet to begin; 100% at the right represents all work being completed. Sprint-level testing is required for any change made due to a Feature, Story or Bug.

Sprint-Level Testing can occur in the following locations:

- Build Release testing occurs primarily in the DEV1 and TST1 environments. Unit and Functional Tests of new functionality resulting from Features, Stories or Build Testing Bugs occur in DEV1, while Integration Testing occurs in TST1.

- Candidate Release testing occurs primarily in the DEV2 and TST2 environments. Unit and Functional Tests of priority Certification Testing Bugs occur in DEV2, while their Integration Testing occurs in TST2. Any changes made here must be merged back to the current Build Release so the changes become part of the ongoing Solution. If they are not merged back, these changes will disappear when the current Build Release becomes the next Candidate Release.

- Production Release testing of Hot-fixes occurs primarily in the DEV3 and TST3 environments. As with all Bug fixes, these changes must be merged backwards, to both the Candidate Release and the Build Release, in order to remain in the Solution over the long term.
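The environment and merge-back rules in the list above can be captured as a small lookup. This is a sketch only: the environment names come from the list, while the dictionary keys and the `plan_fix` helper are hypothetical names introduced for illustration.

```python
# Where Sprint-Level Testing takes place for each kind of Release.
ENVIRONMENTS = {
    "build": {"unit_functional": "DEV1", "integration": "TST1"},
    "candidate": {"unit_functional": "DEV2", "integration": "TST2"},
    "production": {"unit_functional": "DEV3", "integration": "TST3"},
}

# Releases that a fix must be merged back into so that it is not lost.
MERGE_BACK = {
    "build": [],                          # already part of the ongoing Solution
    "candidate": ["build"],
    "production": ["candidate", "build"],
}


def plan_fix(release: str) -> dict:
    """Return where a change is tested and where it must be merged back."""
    envs = ENVIRONMENTS[release]
    return {
        "unit_and_functional_in": envs["unit_functional"],
        "integration_in": envs["integration"],
        "merge_back_to": list(MERGE_BACK[release]),
    }
```

Calling `plan_fix("production")` shows that a Hot-fix is tested in DEV3 and TST3 and then merged back to both the Candidate Release and the Build Release.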

Build-Level Test Types should have individual Test Plans. Mapped out after the Release Planning Event and updated after each Sprint Planning Event, these plans may be altered to reflect the actual progress within the Build Sprints. Individual Stories do not need Test Plans; Stories are simply not "Done" until all of the related tests have been completed successfully. However, each Feature should have a related Test Plan.

Candidate-Level Testing

For Solutions which use it, the third level of testing is Candidate-Level. These tests occur after the Build Release's Last Sprint concludes and 'Accepted' Features are added to the Candidate Release. The Change Sets accepted at the end of a Build cycle are combined and a new Candidate Release Branch is created. The Features (and Stories) on the Branch represent the next advancement of the Solution targeted for Production.

This newer, larger Solution must undergo additional testing that was not (and could not be) conducted within each Sprint. This Certification Testing seeks to ensure that all changes within the Solution work properly together, as well as with other, related Solutions if appropriate. The following types of testing apply to the Certification Stage of each Candidate Release.

- Integration Testing (Release scope)

- System Testing

- Non-Functional Testing

- User Acceptance Testing (Release scope)

The diagram at right shows the rough alignment of test cycle and break/fix activities during each Candidate Release Certification cycle. 0% at the left represents work yet to begin; 100% at the right represents all work being completed over the span of Certification.

Candidate-Level Testing occurs in the following locations:

- Candidate Release testing begins in the TST2 environment. After the creation of each new Candidate Release, the prior TST2 environment is wiped clean and the new Candidate Release Branch is deployed. Upon successful completion of a Smoke Test, Integration Testing begins in TST2 and the same Branch is deployed to DEV2 in case Bug fixes are required (which is likely). Controlled Data is then loaded into each environment.

- Once Integration Testing provides sufficient validation to warrant subsequent types of testing, the Candidate Release Branch is deployed to the QA2 environment over a recent copy of Production. These actions simulate a Move to Production for the Candidate Release. The remaining Certification test cycles now get underway.

- System and NFR Testing occur in QA2 using Uncontrolled Data Sets. If thoughtfully designed, these test cycles can often occur in parallel.

- All Features within the Candidate Release are evaluated for inclusion in UAT. It is common for some Features to have failed earlier tests or for the Customer to otherwise feel they are not ready for Production. The results of these evaluations feed into Production-Level Testing.
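One way to keep Certification cycles from colliding is to record which environment and which kind of data each cycle uses, then check that no environment is asked to hold Controlled and Uncontrolled Data at once. The sketch below assumes a simple tuple layout; the `CYCLES` list and `data_conflicts` function are hypothetical, and User Acceptance Testing is left out because its data set is not specified here.

```python
from collections import defaultdict

# (test cycle, environment, kind of data), taken from the list above.
CYCLES = [
    ("Integration Testing", "TST2", "controlled"),
    ("System Testing", "QA2", "uncontrolled"),
    ("Non-Functional Testing", "QA2", "uncontrolled"),
]


def data_conflicts(cycles):
    """Return the environments asked to hold more than one kind of data."""
    kinds_by_env = defaultdict(set)
    for _name, env, data_kind in cycles:
        kinds_by_env[env].add(data_kind)
    return sorted(env for env, kinds in kinds_by_env.items() if len(kinds) > 1)
```

With the cycles as listed, `data_conflicts(CYCLES)` returns an empty list. Adding a cycle that needs Controlled Data in QA2 would flag `"QA2"`, which is the kind of conflict that segmenting test cycles is meant to avoid.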

Separate Test Plans should be prepared for each Certification cycle and for each Test Type within.

These Test Plans are produced and executed by a QA group. Delivery Teams cannot be expected to manage and execute these tests. While each Certification cycle is underway, the Team(s) are busy working on the next Build Release and managing Sprint-Level & Build-Level Testing.

Production-Level Testing

The final level of testing occurs at the Rollout of new changes. While Release-level testing validated that one or more Features were ready for promotion to production, the determination of which Features to roll out to which User Communities, and at what time, is a separate issue.

At the conclusion of the Certification-Level tests, any unresolved Defects are addressed. Since there is no further development at this point (no time remains), addressing Defects involves:

- a) toggling off Features which are not approved for Production;

- b) removing select Features and Stories from the Release; or

- c) otherwise identifying work-arounds.

The results of these decisions are used to create the next Production Release.

Just as a Candidate Release is assembled from Features 'Accepted' after Build Testing, a Production Release is assembled from Features still 'Accepted' after Certification Testing. Features delivered in earlier Releases but which have remained inactive may also be enabled at this time.
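The assembly of a Production Release can be sketched as a function that decides, for each Feature, whether it ships toggled on, ships toggled off, or is removed. This is an illustration under stated assumptions: the function name, its parameters and the dictionary it returns are hypothetical, and work-arounds (option c) are not modelled.

```python
def assemble_production_release(candidate_features, still_accepted,
                                removed=frozenset(), reactivated=frozenset()):
    """Return a mapping of Feature name to True (toggled on) or False (toggled off).

    candidate_features: Features on the Candidate Release Branch
    still_accepted:     Features still 'Accepted' after Certification Testing
    removed:            Features taken out of the Release entirely (option b)
    reactivated:        Features from earlier Releases being enabled now
    """
    release = {}
    for feature in candidate_features:
        if feature in removed:
            continue  # option b: the Feature is not part of this Release
        # Option a: anything not still 'Accepted' ships toggled off.
        release[feature] = feature in still_accepted
    for feature in reactivated:
        release[feature] = True
    return release
```

For example, a Candidate Release containing Features A, B and C, where only A is still 'Accepted' and C has been removed, yields A toggled on and B toggled off.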

This increment of the Solution now undergoes a final test cycle. Rollout Testing seeks to ensure that all active components work properly together, that inactive components are unavailable, and that any errors, Defects or issues previously identified have been mitigated in an acceptable manner. The following types of testing apply to the Rollout Stage of each Production Release.

- User Acceptance Testing (Rollout scope)

Production-Level Testing occurs in the following location:

- Production Release testing occurs in the QA2 environment. Rollout Testing typically occurs immediately after Candidate-Level Tests are complete, often making the two look like a single event. At any time after successful System Testing, 'certified' Features may be included in a Rollout. Once the scope of the Rollout is established (Features 'Accepted' and Toggled-On / -Off), QA2 is refreshed with a current copy of Production. The new Production Release Branch is deployed, rather than the prior Candidate Release Branch, and Acceptance Testing begins.

The diagram at left shows the rough alignment of the test and potential, though not recommended, break/fix activities during each Rollout. 0% at the left represents work yet to begin; 100% at the right represents all work being completed.

Again, separate Test Plans should be prepared for each Test Type for each Rollout cycle. In most cases, these should re-execute earlier tests in which problems were encountered. Acceptance Tests should never introduce new Test Cases or Scenarios which have not previously been executed in some earlier test cycle.
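The rule that Acceptance Tests introduce nothing new lends itself to a simple check when a Rollout Test Plan is drafted. The sketch below assumes Test Cases can be identified by a unique ID; the function name and the sample IDs are hypothetical.

```python
def unvetted_cases(acceptance_cases, previously_executed):
    """Return the Acceptance Test Case IDs never run in an earlier test cycle."""
    return sorted(set(acceptance_cases) - set(previously_executed))


# Hypothetical usage: TC-301 has not been executed before, so it is flagged.
flagged = unvetted_cases(
    ["TC-101", "TC-205", "TC-301"],
    ["TC-101", "TC-102", "TC-205"],
)
```

An empty result means every planned Acceptance Test Case has already been executed in some earlier cycle.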