Environment Origin & Refresh

The fourth of five (5) subjects in Solution Environments describes the source content for each Environment and the frequency and means by which that content is refreshed.

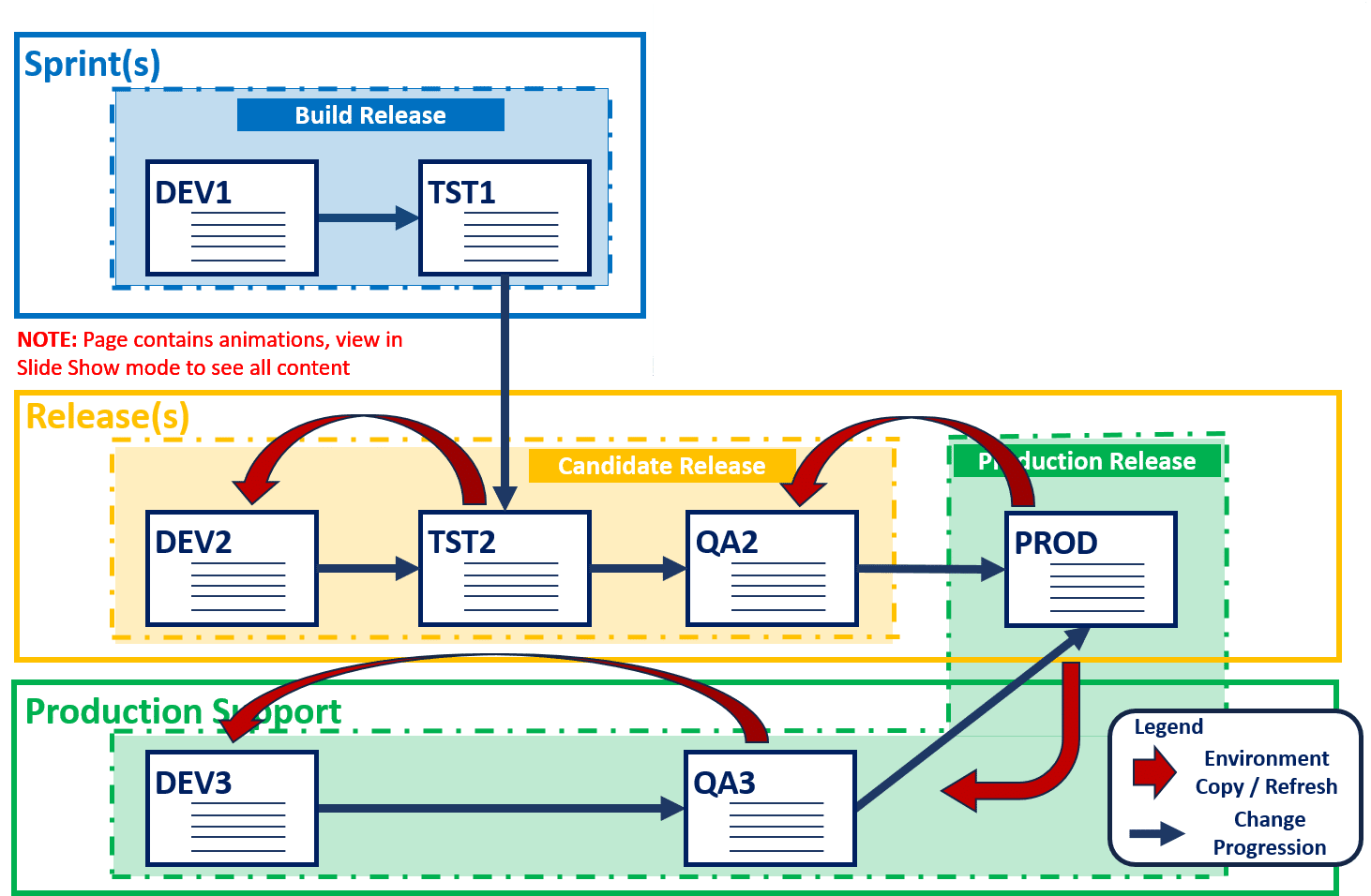

Environments - Creating & Updating

The diagram below depicts each Environment's origin, including the Code Set deployed and the state of Configuration, Operational and Transactional data, as well as how often each of those components is refreshed for any Product following the IT Business Product Solution delivery approach. Refer to the corresponding PowerPoint slideshow section below for an animated walkthrough that gives additional information and explains the sequence of events in more detail. For additional information on how changes are processed, also refer to the Reference Materials for Code Set Management and the Reference Materials for Testing Approach.

Per Environment


Production Content Environments

Both the PROD and QA2 environments should contain the same (or similar) content:

- a) Objects (i.e., Vendor Delivered Code + RICE - Reports, Integrations, Conversions & Extensions);

- b) Operational Data (i.e., application Configuration); and

- c) Transactional Data (i.e., the data generated and managed by Objects & Operational Data).

The QA2 environment is refreshed as a copy of PROD at the beginning of each System or UAT test cycle. The actions taken during this refresh are intended to mirror those that will be required when the next Release is eventually deployed to PROD.

There are very few situations where an additional copy of PROD should ever need to exist.

Non-Production Size & Content

All other environments can be smaller and may reside on smaller and/or shared infrastructure. When possible, virtual environments can be an excellent way to host these environments.

DEV and TST environments should contain source versions of Objects and representative levels of Operational Data. Full Production levels should not be needed.

Most Transactional Data should be sourced from managed data sets designed to support Regression, Functional and Integration testing. Additional Transactional Data will be generated as part of development and/or testing. At any time, any DEV or TST environment should be capable of having its Transactional Data purged and reloaded (i.e., reset).
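A reset can be pictured as "purge, then reload from the managed data set" inside a single transaction. The sketch below is illustrative only: it uses Python's built-in `sqlite3` module as a stand-in for a real Environment database, and the `SEED_DATA` data set and the `orders`/`order_lines` tables are hypothetical.

```python
import sqlite3

# Hypothetical managed data set: table name -> rows to reload.
# Parents are listed before children so the inserts satisfy foreign keys.
SEED_DATA = {
    "orders": [(1, "ACME"), (2, "Globex")],
    "order_lines": [(10, 1, "widget"), (11, 1, "gadget"), (12, 2, "gizmo")],
}

# Hypothetical schema standing in for an application's transactional tables.
SCHEMA = """
CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT);
CREATE TABLE order_lines (id INTEGER PRIMARY KEY,
                          order_id INTEGER REFERENCES orders(id),
                          item TEXT);
"""

def reset_transactional_data(conn: sqlite3.Connection) -> None:
    """Purge the transactional tables and reload them from SEED_DATA."""
    tables = list(SEED_DATA)
    with conn:  # one transaction: the whole reset is committed or rolled back
        # Purge children first (reverse of load order) so no reference breaks.
        for table in reversed(tables):
            conn.execute(f"DELETE FROM {table}")
        # Reload parents first, then children.
        for table in tables:
            rows = SEED_DATA[table]
            placeholders = ", ".join("?" * len(rows[0]))
            conn.executemany(f"INSERT INTO {table} VALUES ({placeholders})", rows)

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # enforce REFERENCES in SQLite
conn.executescript(SCHEMA)
reset_transactional_data(conn)
conn.execute("INSERT INTO orders VALUES (3, 'Initech')")  # created during testing
conn.commit()
reset_transactional_data(conn)  # back to exactly the managed data set
```

Because the reset is repeatable, running it after any test cycle returns the Environment to the same known state, which is what makes Regression results comparable from run to run.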

Refresh Scripts

Over the Solution's lifetime there will be many requests to refresh an Environment. The two most common refresh scenarios are cloning and resetting Environments. Cloning an Environment occurs when DevSecOps uses a copy of a source instance to create a new Environment or to replace an existing one. For instance, after a DEV Environment has been installed and configured, it is common simply to clone it to create the corresponding TST Environment. Similarly, prior to each new Candidate Release test cycle, a copy of the current Production Environment replaces the existing QA Environment. Resetting an Environment occurs when the application and code are not changing, but the data it holds must be purged and reloaded.

After a clone is created, the goal is typically to keep the application, configuration and code, but not the data that came with it.
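As a small illustration of "clone, then keep everything except the data", the sketch below copies a database with `sqlite3.Connection.backup` and then empties the transactional tables in the copy. SQLite stands in for the real database tier, and the `settings`, `orders` and `order_lines` tables are hypothetical; the list of transactional tables is assumed to be supplied already ordered children-first.

```python
import sqlite3

def clone_without_data(source: sqlite3.Connection,
                       transactional_tables: list[str]) -> sqlite3.Connection:
    """Copy the whole database, then empty the listed transactional tables.

    transactional_tables is assumed to be ordered children-first.
    """
    target = sqlite3.connect(":memory:")
    source.backup(target)  # copies schema, configuration rows and data
    target.execute("PRAGMA foreign_keys = ON")  # enforce references in the clone
    with target:
        for table in transactional_tables:
            target.execute(f"DELETE FROM {table}")
    return target

# Illustrative source: one configuration table and two transactional tables.
source = sqlite3.connect(":memory:")
source.executescript("""
CREATE TABLE settings (key TEXT PRIMARY KEY, value TEXT);
CREATE TABLE orders (id INTEGER PRIMARY KEY);
CREATE TABLE order_lines (id INTEGER PRIMARY KEY,
                          order_id INTEGER REFERENCES orders(id));
INSERT INTO settings VALUES ('currency', 'USD');
INSERT INTO orders VALUES (1);
INSERT INTO order_lines VALUES (10, 1);
""")
clone = clone_without_data(source, ["order_lines", "orders"])
```

The clone keeps the `settings` row while its transactional tables are empty, and the source is left untouched.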

Shortly after the initial application is installed, one of the first tasks for DevSecOps is to develop scripts that safely and appropriately remove data from any Environment. Most applications rely on dependencies, constraints and indexes that relate keys across the tables in their database (for example, a foreign key linking each order line to its order). As a result, producing these scripts is not as simple as deleting all data from all tables.
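Those dependencies can usually be read from the database catalog rather than discovered by hand. A minimal sketch, assuming SQLite as the stand-in database: it lists user tables from `sqlite_master` and reads each table's foreign keys with `PRAGMA foreign_key_list`, producing (child, parent) pairs. Other engines expose the same information through their own catalog views, and the example schema is hypothetical.

```python
import sqlite3

def foreign_key_edges(conn: sqlite3.Connection):
    """Return (tables, edges) where each edge is a (child, parent) pair."""
    # Skip SQLite's internal tables; assumes simple, unquoted table names.
    tables = [row[0] for row in conn.execute(
        "SELECT name FROM sqlite_master "
        "WHERE type = 'table' AND name NOT LIKE 'sqlite_%' ORDER BY name")]
    edges = set()  # a set collapses multi-column keys into one edge
    for table in tables:
        # Each row is (id, seq, table, from, to, on_update, on_delete, match);
        # index 2 is the referenced (parent) table.
        for fk in conn.execute(f"PRAGMA foreign_key_list({table})"):
            edges.add((table, fk[2]))
    return tables, sorted(edges)

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY);
CREATE TABLE orders (id INTEGER PRIMARY KEY,
                     customer_id INTEGER REFERENCES customers(id));
CREATE TABLE order_lines (id INTEGER PRIMARY KEY,
                          order_id INTEGER REFERENCES orders(id));
""")
tables, edges = foreign_key_edges(conn)
print(edges)
```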

First, the scripts must separate transactional data from operational and configuration data. For most Solutions, scripts to remove transactional and operational data will be the most helpful. The scripts to remove transactional data must first identify the relevant tables; for some large enterprise Solutions, this can involve hundreds of tables. After identifying the transactional tables, the next step is to determine the dependencies between them. To avoid corrupting data, dependent data (rows that reference other rows) must be removed before the independent data it references. Removing a referenced row first either fails on a constraint or, where constraints are not enforced, leaves orphaned dependent rows behind.
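Finding a safe removal sequence is a topological sort of the dependency graph. Because a row that is still referenced cannot safely be removed, the sort places every child (referencing) table ahead of its parent. The sketch below uses Python's standard `graphlib.TopologicalSorter`; the table names are hypothetical, and references to tables outside the set being purged (for example, operational tables that are kept) are ignored.

```python
from graphlib import TopologicalSorter

def deletion_order(tables, fk_edges):
    """Return tables ordered so every child is emptied before its parent.

    fk_edges holds (child, parent) pairs. Edges to tables outside `tables`
    (e.g. operational or configuration tables that are not being purged) are
    ignored, as are self-references, which need separate handling.
    """
    graph = {table: set() for table in tables}
    for child, parent in fk_edges:
        if child != parent and child in graph and parent in graph:
            # Make the child a predecessor of the parent: it is emptied first.
            graph[parent].add(child)
    # static_order() raises graphlib.CycleError if tables reference each
    # other in a loop; such cycles need constraints deferred or disabled.
    return list(TopologicalSorter(graph).static_order())

transactional = ["orders", "order_lines", "shipments"]
edges = [("order_lines", "orders"), ("shipments", "order_lines"),
         ("orders", "customers")]  # customers: operational, not purged
print(deletion_order(transactional, edges))
# ['shipments', 'order_lines', 'orders']
```

Computing the order from the catalog, rather than hard-coding it, keeps the scripts correct when a new Release adds tables or constraints.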

After identifying the correct sequence, the next step is to choose the most effective way to remove the data. When possible, truncating a table (dropping all of its rows in a single operation) is often the fastest. Alternatively, the transactions may be deleted from the table row by row. However, deleting data produces rollback (undo) records and other overhead that can slow the process; for the largest Environments, this method may require many hours for the scripts to run.
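When deleting is the only option, one common way to keep each transaction's rollback footprint small is to delete in fixed-size batches and commit between them. A minimal sketch, again using SQLite as a stand-in (SQLite has no `TRUNCATE`, so batching is shown instead); the `events` table and the batch size are hypothetical, and the `rowid` approach assumes an ordinary (not `WITHOUT ROWID`) table.

```python
import sqlite3

def delete_in_batches(conn: sqlite3.Connection, table: str,
                      batch_size: int = 10_000) -> int:
    """Delete every row of `table` in batches; return the number removed."""
    total = 0
    while True:
        with conn:  # commit each batch so no single transaction grows large
            cur = conn.execute(
                f"DELETE FROM {table} WHERE rowid IN "
                f"(SELECT rowid FROM {table} LIMIT ?)", (batch_size,))
        if cur.rowcount == 0:  # nothing left to delete
            return total
        total += cur.rowcount

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, payload TEXT)")
conn.executemany("INSERT INTO events (payload) VALUES (?)",
                 [(f"e{i}",) for i in range(25)])
conn.commit()
removed = delete_in_batches(conn, "events", batch_size=10)
```

Batching trades one long transaction for many short ones: a failure part-way leaves the table partially emptied, which is acceptable here because the script can simply be re-run.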

If producing scripts to remove operational or configuration data, follow the same sequence used for transactional data.